Guide To AWS Load Balancers


Benefits of Using AWS Elastic Load Balancing

The main purpose of Elastic Load Balancing (ELB) is to keep your resources elastic and highly available. Depending on the load balancer type, its listeners can accept protocols such as HTTP, HTTPS, TCP, and UDP.

Some of the benefits of ELB include the following:

- High availability and distribution of website traffic to multiple destinations or targets.

- High security through user authentication and SSL/TLS decryption functions.

- Handling drastic changes in website traffic without human intervention.

- Increased visibility of your applications through continuous monitoring and auditing.

- Hybrid load balancing, which can help with the migration of resources to the cloud.

Types of AWS Load Balancers

As of today, AWS supports four types of load balancers: Classic Load Balancers, Network Load Balancers (NLBs), Application Load Balancers (ALBs), and Gateway Load Balancers (GWLBs). This article will focus on the first three, as their sole purpose is to distribute incoming traffic across multiple targets.

The Classic Load Balancer is a previous-generation load balancer. It is recommended only if you still have instances running on an EC2-Classic network. Otherwise, AWS recommends that you use an NLB or an ALB, since between them they cover the features of the Classic Load Balancer.

Classic Load Balancer

This is the simplest type of load balancer and was originally used for EC2-Classic instances. It operates at both the connection level and the request level. Its main disadvantage is that it does not support features such as host-based or path-based routing. Once configured, it distributes the load among the servers regardless of what each server hosts, which, in certain situations, can reduce efficiency and performance.

Application Load Balancer

The Application Load Balancer (ALB) operates at layer 7 of the OSI model and can route each request to a different backend service based on the content of the request. Instead of running a separate load balancer for each service, a single ALB can balance traffic for multiple backend services. For example, a URL containing "/api" and a URL containing "/signup" can be routed to different backend services.
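
To make the idea of path-based routing concrete, here is a minimal sketch in plain Python. It is only a model of the matching logic, not how AWS implements it. The rule list, the target group names (`api-service`, `signup-service`, `web-frontend`), and the function `pick_target_group` are hypothetical, and `fnmatchcase` is used as a stand-in for ALB path-pattern wildcards.

```python
from fnmatch import fnmatchcase

# Hypothetical rules: (priority, path pattern, target group name).
# Rules with lower priority numbers are checked first.
RULES = [
    (10, "/api/*", "api-service"),
    (20, "/signup*", "signup-service"),
]
DEFAULT_TARGET = "web-frontend"  # stands in for the listener's default rule


def pick_target_group(path: str) -> str:
    """Return the target group of the first rule whose pattern matches the path."""
    for _priority, pattern, target in sorted(RULES):
        if fnmatchcase(path, pattern):
            return target
    return DEFAULT_TARGET


print(pick_target_group("/api/orders"))  # api-service
print(pick_target_group("/signup"))      # signup-service
print(pick_target_group("/about"))       # web-frontend
```

Requests that match no rule fall through to the default target, which mirrors the role of a listener's default rule.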

This newer generation of AWS load balancer provides native support for the HTTP/2 and WebSocket protocols. By multiplexing requests over a single connection, HTTP/2 reduces network traffic. WebSocket lets developers keep a persistent TCP connection open between client and server, which can help minimize power consumption on client devices.

Compared with the Classic Load Balancer, the ALB supports more features, such as the following:

- Support for path-based/host-based routing.

- Ability to register IP addresses as targets.

- Support for calling Lambda functions to serve HTTP(S) requests.

- Support for SNI.

- Support for load balancing between multiple ports of a single instance to provide enhanced container support.

Network Load Balancer

If your application needs extreme performance and a static IP address, AWS recommends that you use a Network Load Balancer (NLB).

The NLB is optimized to handle bursty, unstable traffic patterns and rapidly fluctuating workloads. It provides high throughput and can scale to handle millions of requests per second. If you need to handle bursty workloads at the transport layer or require extreme network performance, consider using an NLB.

In addition, an NLB automatically provides a static IP address in each Availability Zone, which serves as the frontend IP of the load balancer. You can also assign an Elastic IP address to each subnet enabled for the load balancer. This lets you incorporate the NLB into your existing firewall security policies and avoid the problems associated with DNS caching.
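
As a rough illustration of assigning one Elastic IP per subnet, the sketch below builds the keyword arguments that boto3's `elbv2` `create_load_balancer` call accepts for an NLB. The helper name `nlb_request` and all IDs are hypothetical placeholders, and the function only assembles the request rather than calling AWS.

```python
def nlb_request(name, subnet_to_allocation):
    """Build create_load_balancer arguments for an internet-facing NLB.

    subnet_to_allocation maps a subnet ID to the allocation ID of the
    Elastic IP that should be attached in that subnet.
    """
    return {
        "Name": name,
        "Type": "network",
        "Scheme": "internet-facing",
        "SubnetMappings": [
            {"SubnetId": subnet_id, "AllocationId": allocation_id}
            for subnet_id, allocation_id in subnet_to_allocation.items()
        ],
    }


# Placeholder IDs for illustration only.
params = nlb_request(
    "demo-nlb",
    {"subnet-aaa111": "eipalloc-111", "subnet-bbb222": "eipalloc-222"},
)
print(params["SubnetMappings"])
```

With credentials configured, the result could be passed along as `boto3.client("elbv2").create_load_balancer(**params)`.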

How to Configure Application Load Balancers

Next, learn how to create an ALB through the AWS Management Console, a web-based interface.

Before you start, decide which two Availability Zones you will use for your EC2 instances. Configure your virtual private cloud (VPC) with at least one public subnet in each of these Availability Zones. These public subnets are used to configure the load balancer. If you prefer, you can launch your EC2 instances in other subnets of the same Availability Zones.

Launch at least one EC2 instance in each Availability Zone. Install a web server, such as Apache, on each instance, and make sure that the instances' security groups allow HTTP access on port 80.

Then complete the following steps.

Select a Load Balancer Type

To create an ALB, complete the following steps:

- Open the Amazon EC2 console.

- Choose a region on the navigation bar for your load balancer. Be sure to select the same region that you used for your EC2 instances.

- Choose Load balancers on the navigation pane under LOAD BALANCING.

- Choose "Create load balancer".

- Choose "Create" under Application Load Balancer.

Complete Basic Configuration

To configure your cloud load balancer, complete the following steps:

- Enter a name for your load balancer in the "Load balancer name" field.

- Keep the default values for Scheme and IP address type.

- Under Network mapping, select the VPC that you used for your EC2 instances. Under Mappings, select each Availability Zone in which you launched EC2 instances, and then select the public subnet for that Availability Zone.

- Keep the default for Listeners, which is a listener that accepts HTTP traffic on port 80.

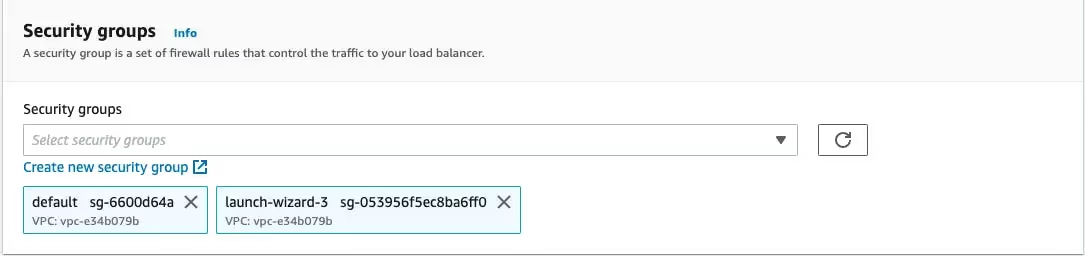
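
For readers who prefer scripting, the same basic configuration can be sketched with the AWS SDK for Python. The helper below only builds the arguments for boto3's `elbv2` `create_load_balancer` call. The name `alb_request` and the subnet and security group IDs are hypothetical, and the defaults mirror the console values kept above (internet-facing scheme, IPv4).

```python
def alb_request(name, public_subnet_ids, security_group_ids):
    """Build create_load_balancer arguments for an internet-facing ALB."""
    # Only the subnet count is checked here; each subnet should also be
    # in a different Availability Zone, which this sketch does not verify.
    if len(set(public_subnet_ids)) < 2:
        raise ValueError("provide at least two public subnets")
    return {
        "Name": name,
        "Type": "application",
        "Scheme": "internet-facing",
        "IpAddressType": "ipv4",
        "Subnets": list(public_subnet_ids),
        "SecurityGroups": list(security_group_ids),
    }


# Placeholder IDs for illustration only.
params = alb_request("demo-alb", ["subnet-aaa111", "subnet-bbb222"], ["sg-0123"])
print(params)
```

With credentials configured, the dictionary could be passed as `boto3.client("elbv2").create_load_balancer(**params)`.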

Configure a Security Group

To have ELB configure a security group for your load balancer on your behalf, complete the following steps:

- Choose "Create new security group" in the "Security groups" section.

- Type a name and description for the security group or keep the default name and description. This new security group contains a rule that allows traffic to the load balancer listener port selected on the Configure load balancer page.
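
The rule that the console adds can be approximated in code as well. This sketch builds a single inbound permission in the shape that boto3's EC2 `authorize_security_group_ingress` call expects in its `IpPermissions` list. The helper name `listener_ingress_rule` is hypothetical, and opening the port to `0.0.0.0/0` is an assumption that suits a public demo rather than a recommendation.

```python
def listener_ingress_rule(port=80, cidr="0.0.0.0/0"):
    """Build one inbound TCP permission for the load balancer's listener port."""
    return {
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        "IpRanges": [
            {"CidrIp": cidr, "Description": "Inbound traffic to the listener"}
        ],
    }


rule = listener_ingress_rule()
print(rule)
```

After creating the group with `create_security_group`, the rule could be applied with `ec2.authorize_security_group_ingress(GroupId=group_id, IpPermissions=[rule])`, where `ec2` is a boto3 EC2 client and `group_id` is the ID of the new group.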

Configure Your Target Group

Create a target group to use in request routing. The default rule for your listener routes requests to targets registered in that group. The load balancer checks the health of the targets in this target group with the health check settings defined for the target group.

To configure your target group, complete the following steps in the Configure Routing section:

- Keep the default (New target group) for Target group.

- Enter a name for the new target group under Name.

- Keep the default target type (Instance), protocol (HTTP), and port (80).

- Keep the default settings for Health checks.

- Choose Next.

- Choose "Create target group".

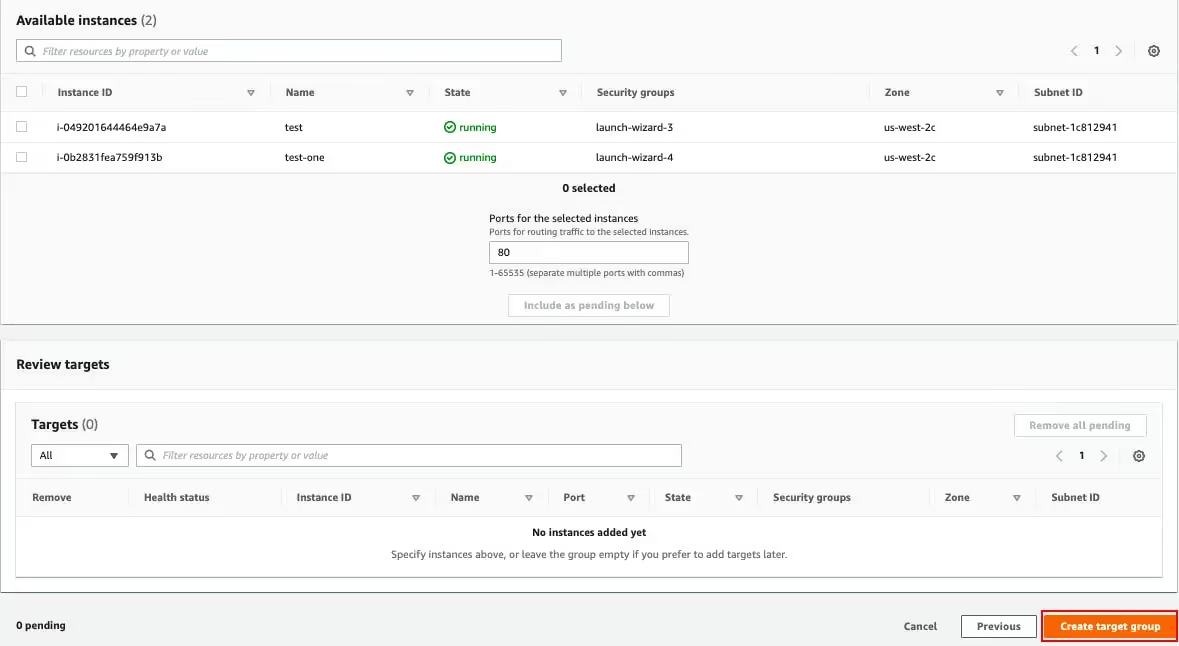

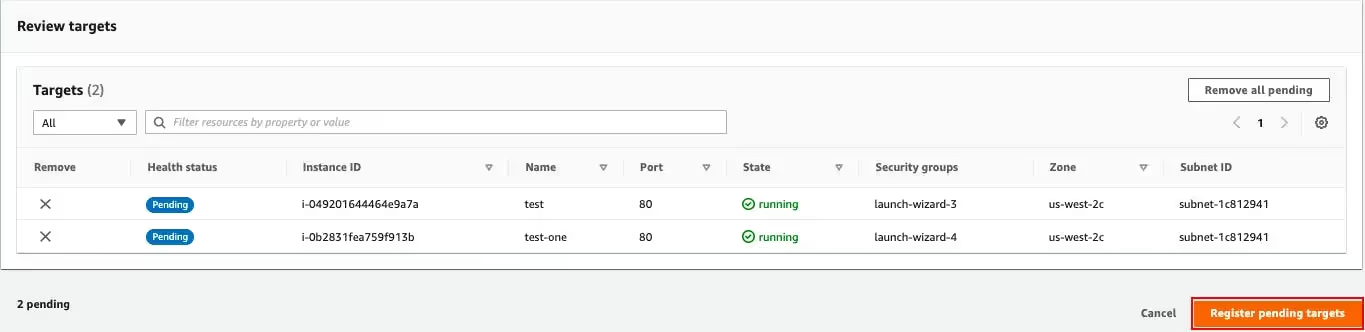
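
The target group settings above, together with the default HTTP listener on port 80, might look like this when expressed as arguments for boto3's `elbv2` `create_target_group` and `create_listener` calls. Both helper names and every ID or ARN are hypothetical placeholders, and the health check path `/` is an assumed value.

```python
def target_group_request(name, vpc_id):
    """Build create_target_group arguments: instance targets, HTTP on port 80."""
    return {
        "Name": name,
        "Protocol": "HTTP",
        "Port": 80,
        "VpcId": vpc_id,
        "TargetType": "instance",
        "HealthCheckProtocol": "HTTP",
        "HealthCheckPath": "/",  # assumed path for the health check
    }


def listener_request(load_balancer_arn, target_group_arn):
    """Build create_listener arguments whose default rule forwards to the group."""
    return {
        "LoadBalancerArn": load_balancer_arn,
        "Protocol": "HTTP",
        "Port": 80,
        "DefaultActions": [
            {"Type": "forward", "TargetGroupArn": target_group_arn}
        ],
    }


# Placeholder values for illustration only.
tg = target_group_request("demo-targets", "vpc-0abc")
listener = listener_request("arn:placeholder:alb", "arn:placeholder:tg")
print(tg["TargetType"], listener["DefaultActions"][0]["Type"])  # instance forward
```

In a real script, the target group ARN returned by `create_target_group` would be fed into `listener_request` in place of the placeholder.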

Register Targets with Your Target Group

To register your instances with the target group, complete the following steps on the Register Targets page:

- Select one or more instances under Instances.

- Keep the default port (80) and choose Include as pending below.

- Choose Register pending targets when you have finished selecting instances.

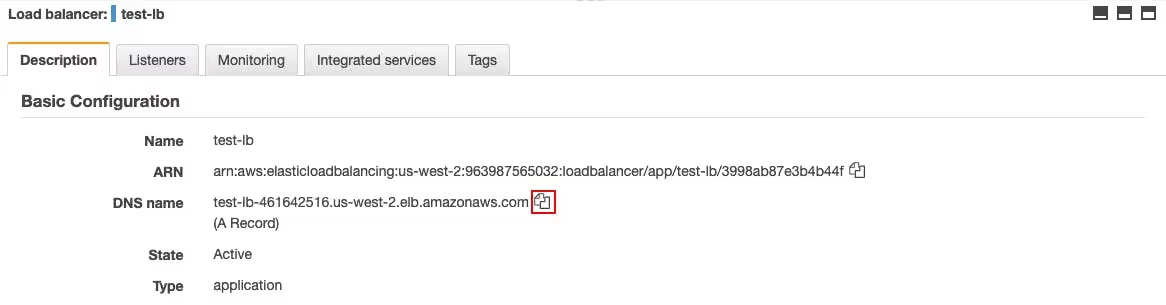
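
Registering instances can also be scripted. The following sketch builds the arguments for boto3's `elbv2` `register_targets` call. The helper name `register_targets_request`, the ARN, and the instance IDs are hypothetical placeholders.

```python
def register_targets_request(target_group_arn, instance_ids, port=80):
    """Build register_targets arguments for a list of EC2 instance IDs."""
    return {
        "TargetGroupArn": target_group_arn,
        "Targets": [
            {"Id": instance_id, "Port": port} for instance_id in instance_ids
        ],
    }


# Placeholder values for illustration only.
params = register_targets_request("arn:placeholder:tg", ["i-0aaa", "i-0bbb"])
print(params["Targets"])
```

With credentials configured, the result could be passed as `boto3.client("elbv2").register_targets(**params)`.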

Create and Test Your Load Balancer

Review the summary of your settings before creating your load balancer. After creating the load balancer, verify that it's sending traffic to your EC2 instances.

To create and test your load balancer, complete the following steps:

- Choose "Create load balancer" after the summary section.

- Choose "Close" after you are notified that your load balancer was created successfully.

- Choose "Target groups" on the navigation pane, under LOAD BALANCING, and then select the newly created target group.

- Check the "Targets" tab to verify that your instances are ready. If the status of an instance is "initial", the instance is probably still being registered, or it has not yet passed the minimum number of health checks to be considered healthy. Once the status of at least one instance is "healthy", you can test your load balancer.

- Choose Load balancers on the navigation pane, under LOAD BALANCING.

- Select the newly created load balancer.

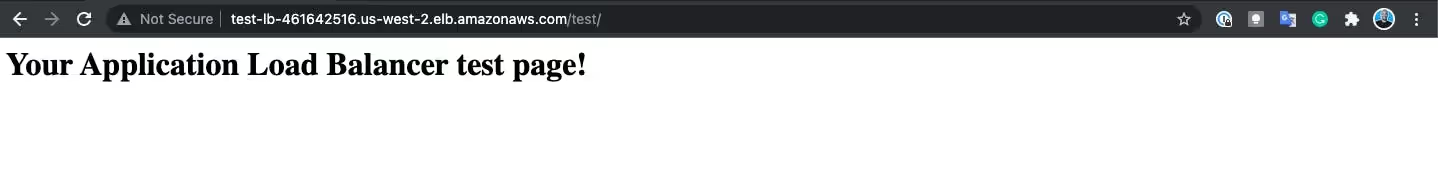

- Copy the DNS name of the load balancer from the Description tab. Paste the DNS name into the address field of an Internet-connected web browser. If everything is working, the browser will display the default page of your server.
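
The readiness check described in these steps can be sketched in code too. The helpers below read a response shaped like the one returned by boto3's `elbv2` `describe_target_health` call and report whether at least one target is healthy. The function names and the sample response are hypothetical. A real response would come from the API.

```python
def summarize_health(response):
    """Map each target ID to its health state."""
    return {
        item["Target"]["Id"]: item["TargetHealth"]["State"]
        for item in response["TargetHealthDescriptions"]
    }


def ready_to_test(response):
    """Return True once at least one registered target is healthy."""
    return any(
        state == "healthy" for state in summarize_health(response).values()
    )


# Hand-written sample in the shape of a describe_target_health response.
sample = {
    "TargetHealthDescriptions": [
        {"Target": {"Id": "i-0aaa", "Port": 80}, "TargetHealth": {"State": "initial"}},
        {"Target": {"Id": "i-0bbb", "Port": 80}, "TargetHealth": {"State": "healthy"}},
    ]
}
print(summarize_health(sample))  # {'i-0aaa': 'initial', 'i-0bbb': 'healthy'}
print(ready_to_test(sample))     # True
```

Once `ready_to_test` returns True, requesting the load balancer's DNS name over HTTP should return the default page of one of the web servers.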

Conclusion

In this article, you learned the basics of the load balancers available in AWS (excluding GWLBs). You also learned how to create an ALB and register EC2 instances with it.

Some key points to remember are as follows:

- Load balancers are used for increasing the availability and fault tolerance of your application.

- Load balancers in AWS come in four flavors: the Classic Load Balancer, ALB, NLB, and GWLB.

- You should ideally use an ALB or NLB, since the Classic Load Balancer is a previous-generation option.

- NLB can handle bursty workloads and millions of requests per second.

- ALB has support for path-based or host-based routing and can balance traffic for multiple backend services.

The unique needs of your application use case (such as whether you need end-to-end SSL/TLS encryption or whether you want path-based or host-based routing) will determine the type of load balancer that is best for you.
