What Is a Kubernetes Deployment? A Guide on How to Use It

Kubernetes has become an indispensable tool in the world of modern application deployment. As a powerful system for managing containerized applications, it streamlines the process of deploying, scaling, and operating applications across clusters of hosts. This article will serve as a comprehensive guide to understanding Kubernetes deployments, a core feature of Kubernetes. We will discuss managing applications with Deployments, various deployment strategies, and the best practices for secure and efficient Kubernetes deployments. After reading this guide, you should have a solid understanding of Kubernetes deployments, making it easier for you to take advantage of their full potential in your application deployment processes.

Morgan Perry

October 8, 2023 · 10 min read

#Understanding Kubernetes Deployments

#Definition of Kubernetes Deployment

A Kubernetes Deployment is a resource that declares the desired state for a set of identical Pods. It allows you to manage the lifecycle of containerized applications, ensuring that a specified number of replicas of your application are running at any given time. Deployments automate the process of updating, scaling, and rolling back your applications, making the management of containers more efficient and reliable.

#How Kubernetes Deployment Works

Kubernetes Deployment operates through a series of steps:

- Deployment Creation: You define a desired state in a Deployment configuration. This includes details like the number of replicas, container images, and resources needed.

- Deployment Controller: Once the configuration is submitted, the Deployment Controller takes over. It checks the current state of the cluster and works to match the desired state.

- Updating Applications: When you update a Deployment, such as changing the container image, Kubernetes gradually updates the Pods to the new version while ensuring service availability.

- Rollback and Scaling: You can scale the number of replicas up or down. In case of issues, Kubernetes allows for easy rollback to a previous state.
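As a rough illustration of the desired state described above, the following Python sketch builds a minimal Deployment manifest as a plain dictionary and prints it as JSON, a format `kubectl apply -f` accepts alongside YAML. The name `web`, the label `app: web`, the image `nginx:1.25`, and the resource values are placeholders chosen for this example, not values taken from this guide.

```python
import json


def build_deployment(name: str, image: str, replicas: int) -> dict:
    """Return a minimal apps/v1 Deployment manifest as a dictionary."""
    labels = {"app": name}  # assumed labelling convention for this sketch
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            # Desired number of identical Pods.
            "replicas": replicas,
            # The selector must match the labels on the Pod template.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "resources": {
                                "requests": {"cpu": "100m", "memory": "128Mi"},
                                "limits": {"cpu": "500m", "memory": "256Mi"},
                            },
                        }
                    ]
                },
            },
        },
    }


deployment = build_deployment("web", "nginx:1.25", replicas=3)
print(json.dumps(deployment, indent=2))
```

Saving the output to a file and applying it with `kubectl apply -f` would submit the desired state to the cluster. Changing `replicas` or `image` and applying again corresponds to the scaling and updating steps, and `kubectl rollout undo deployment/web` would return to the previous revision.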

#Key components of a Kubernetes deployment

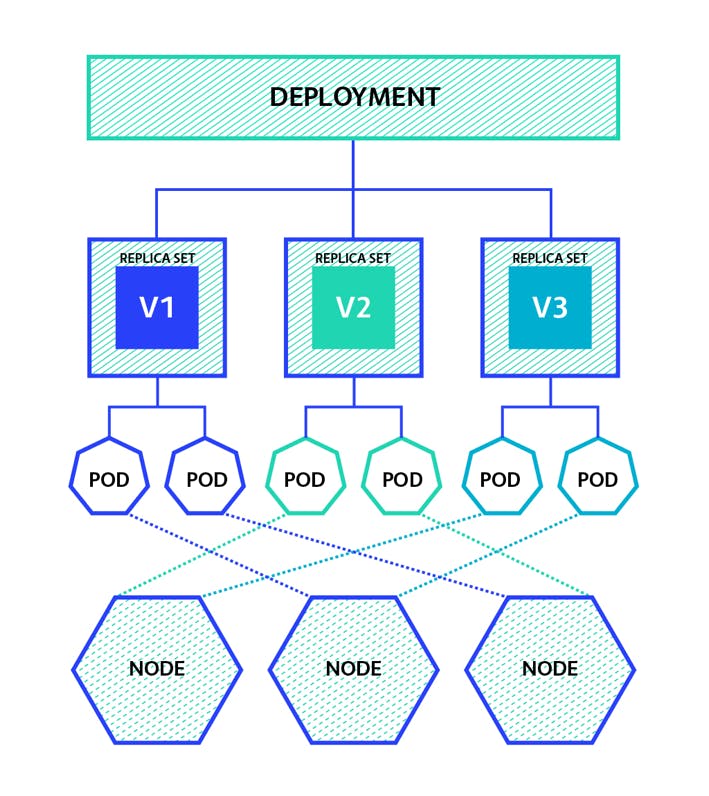

Here are the key components of a Kubernetes deployment:

- Pods: The basic unit of deployment in Kubernetes. A Pod represents a single instance of your application.

- ReplicaSets: Ensure that a specified number of Pod replicas are running at any time. They are created and managed automatically by Deployments.

- Labels and Selectors: Used for organizing and controlling groups of Pods. Labels are key/value pairs attached to objects, and Selectors retrieve objects based on their labels.

- Service: An abstraction layer that provides a stable network endpoint for a set of Pods, for traffic from inside or outside the cluster.

- Volumes: Attach storage to your Pods. With persistent volumes, data can outlive individual Pods.

- Namespace: Provides a mechanism for isolating groups of resources within a single cluster, for example production, staging, and dev.
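To make labels and selectors more concrete, here is a small Python sketch of equality-based matching, the simplest form a selector can take. The Pod names and labels are invented for the example, and the `matches` helper is hypothetical; it only mirrors the idea, not the Kubernetes implementation.

```python
def matches(selector: dict, labels: dict) -> bool:
    """Return True when every key/value pair in the selector appears in labels."""
    return all(labels.get(key) == value for key, value in selector.items())


# Hypothetical Pods, reduced to a name and a set of labels.
pods = [
    {"name": "web-1", "labels": {"app": "web", "env": "production"}},
    {"name": "web-2", "labels": {"app": "web", "env": "staging"}},
    {"name": "db-1", "labels": {"app": "db", "env": "production"}},
]

selector = {"app": "web", "env": "production"}
selected = [pod["name"] for pod in pods if matches(selector, pod["labels"])]
print(selected)  # ['web-1']
```

Deployments, ReplicaSets, and Services all rely on this kind of matching to decide which Pods belong to them.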

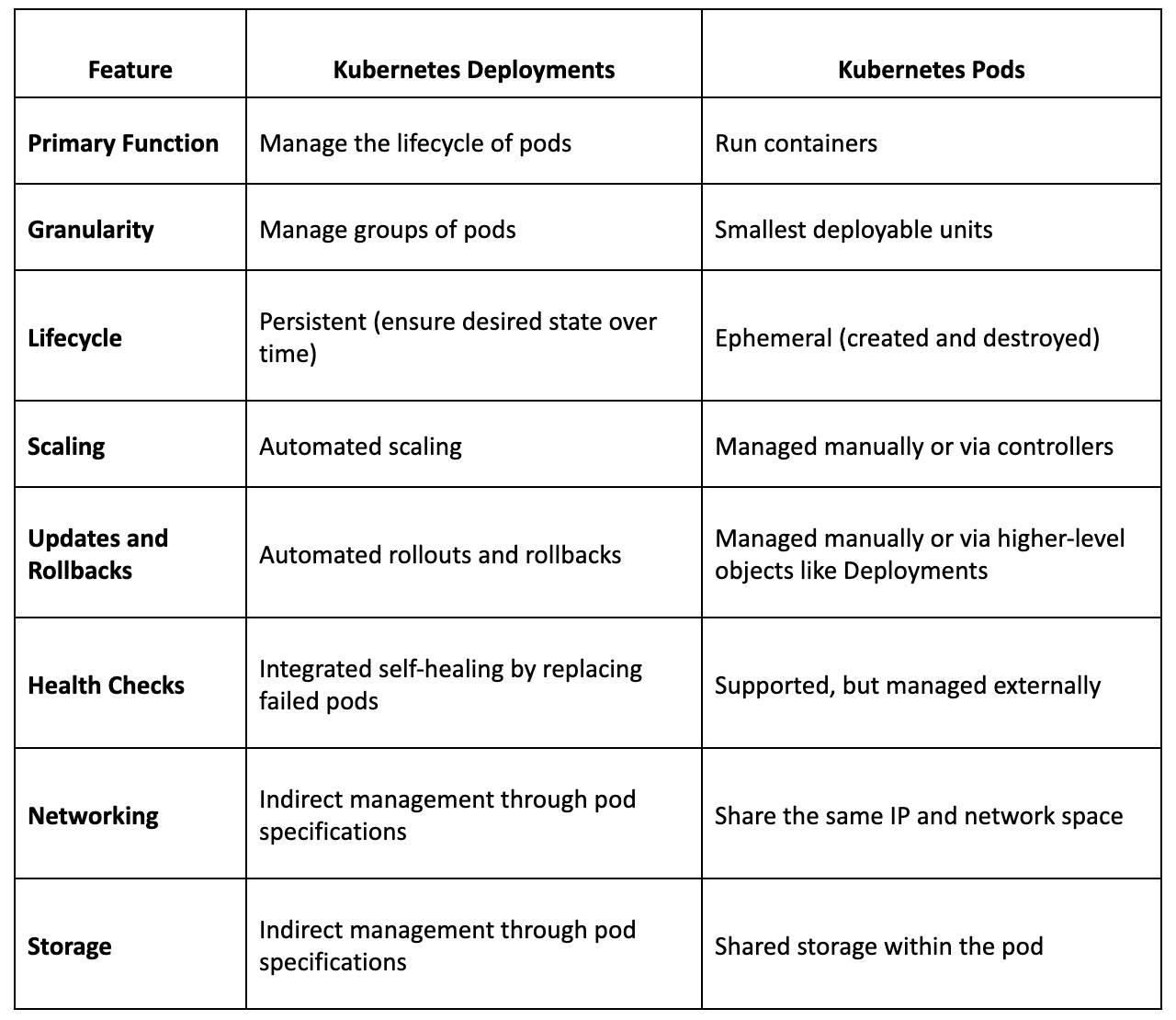

#Kubernetes Deployment vs. Pods

#Understanding pods in Kubernetes

Pods are the smallest deployable units in Kubernetes, often described as the backbone of Kubernetes architecture. A pod is a group of one or more containers with shared storage/network resources and a specification for how to run the containers. Key aspects of pods include:

- Shared Context: Containers in a pod share the same IP address and port space and can find each other via localhost. They also share mounted storage.

- Lifecycle: Pods are ephemeral in nature. They are created, assigned a unique ID (UID), and, if terminated, cannot be brought back in the same state.

#Contrasting Kubernetes Deployments and Pods

While pods are the basic building blocks, Kubernetes Deployments provide a higher-level management functionality. Understanding the differences is crucial:

- Lifecycle Management: Deployments manage the lifecycle of pods. They ensure that a specified number of pods are running at any given time.

- Scaling and Updating: Deployments make it easy to scale pods and to roll out new pod versions incrementally, typically without downtime.

- Self-healing: Through their ReplicaSets, Deployments replace pods that fail or are deleted. The kubelet on each node restarts containers that fail a user-defined liveness probe.

#Managing pod-based applications with deployments

Deployments simplify the process of managing pod-based applications. Here's how:

- Automated Rollouts and Rollbacks: Deployments automate the rollout of changes to an application or its configuration. If something goes wrong, you can return to a previous revision with kubectl rollout undo. By default, Kubernetes does not roll back a failed rollout on its own.

- Declarative Updates: Kubernetes allows you to describe the desired state in a Deployment, and the system works to maintain this state.

- Scaling: Deployments allow for scaling up or down the number of pods as needed.
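The declarative model above can be pictured as a loop that compares the desired state with what is actually running and works out the difference. The sketch below is a deliberately simplified illustration of that idea. The `reconcile` function and the Pod names are hypothetical, and the real controller works through ReplicaSets and the Kubernetes API rather than plain lists.

```python
def reconcile(desired_replicas: int, running_pods: list[str]) -> list[str]:
    """Return the actions needed to move the observed state to the desired state."""
    difference = desired_replicas - len(running_pods)
    if difference > 0:
        # Too few Pods: create the missing ones.
        return [f"create pod #{i + 1}" for i in range(difference)]
    if difference < 0:
        # Too many Pods: remove the surplus from the end of the list.
        return [f"delete {name}" for name in running_pods[difference:]]
    return []  # Observed state already matches the desired state.


print(reconcile(3, ["web-a"]))                    # two Pods missing
print(reconcile(3, ["web-a", "web-b", "web-c"]))  # nothing to do
print(reconcile(1, ["web-a", "web-b", "web-c"]))  # two Pods too many
```

Scaling up or down is then just a change to the desired number; the same comparison produces the required actions.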

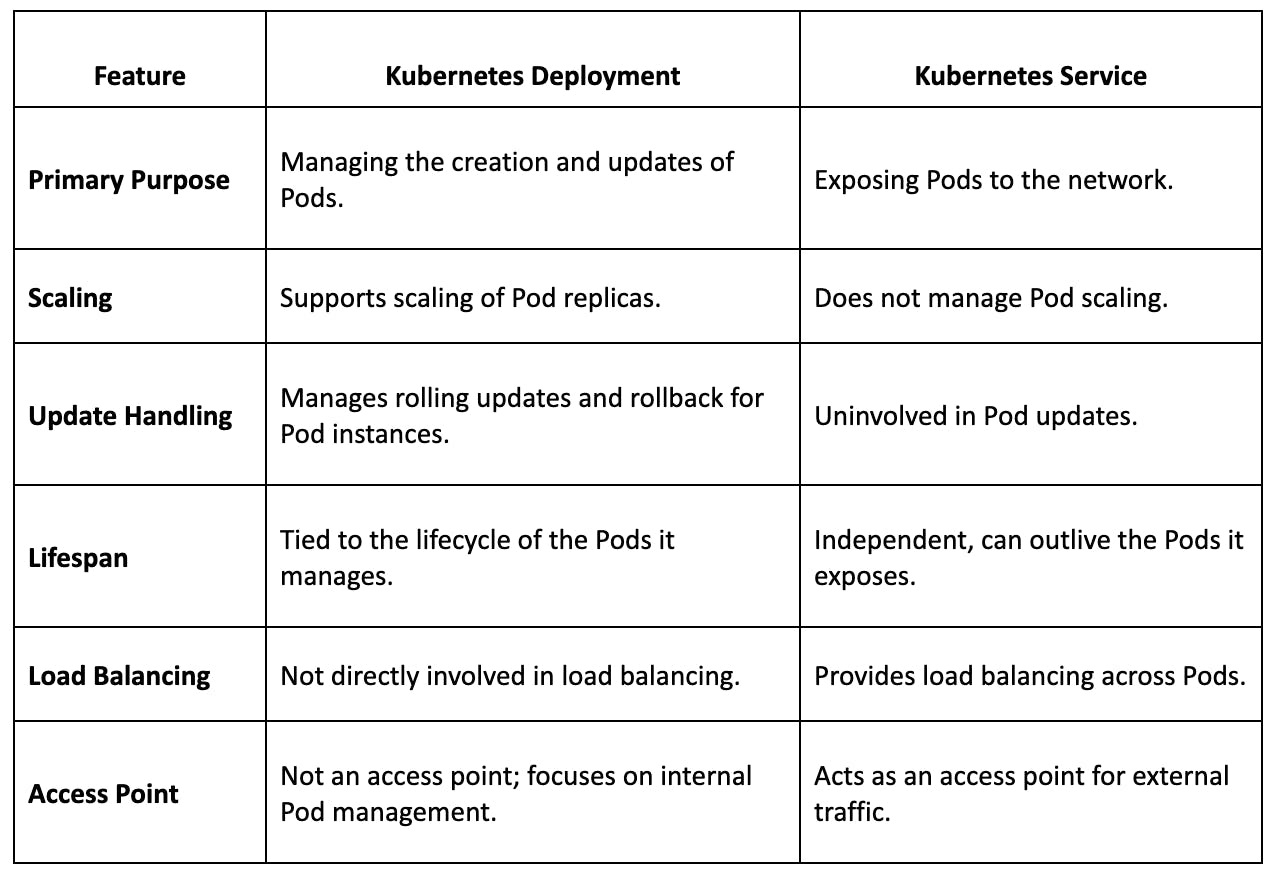

#Kubernetes Deployments vs. Services

#Understanding Kubernetes services

Kubernetes Services are a critical concept in Kubernetes, acting as an abstraction layer to expose applications running on a set of Pods. Services enable network access to a set of Pods, often through a load balancer. They ensure that network requests are distributed among the Pods in a balanced manner. They play a key role in managing how external and internal traffic reaches the Pods in a Kubernetes cluster.

Key aspects of Kubernetes Services include:

- Stable Network Interface: They provide a stable IP address and port number for accessing the Pods, regardless of the changes in the Pod instances.

- Load Balancing: Services distribute network traffic across the ready Pods that match the Service's selector, enhancing performance and reliability.

- Service Discovery: Services can be discovered within the cluster through Kubernetes DNS or environment variables, facilitating communication between different components.
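As an illustration of these aspects, the following Python sketch builds a minimal ClusterIP Service manifest as a dictionary and derives the DNS name under which the Service would usually be reachable inside the cluster. The Service name `web`, the label `app: web`, the ports, and the default `cluster.local` domain are assumptions made for this example.

```python
import json


def build_service(name: str, selector: dict, port: int, target_port: int) -> dict:
    """Return a minimal v1 Service manifest as a dictionary."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "ClusterIP",
            # Traffic goes to Pods whose labels match this selector.
            "selector": dict(selector),
            # Stable Service port, forwarded to the container port.
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


def service_dns_name(name: str, namespace: str = "default",
                     cluster_domain: str = "cluster.local") -> str:
    """Return the conventional in-cluster DNS name for a Service."""
    return f"{name}.{namespace}.svc.{cluster_domain}"


service = build_service("web", {"app": "web"}, port=80, target_port=8080)
print(json.dumps(service, indent=2))
print(service_dns_name("web"))  # web.default.svc.cluster.local
```

Because the Service selects Pods by label rather than by name, Pods can be replaced during an update without clients needing a new address.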

#Differences between Deployments and Services

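Here is a summary of the difference between Deployments and Services: a Deployment manages the lifecycle of Pods (how many replicas run, how they are updated, and how they are rolled back), while a Service manages network access to those Pods (a stable address, load balancing, and service discovery). The two are typically used together.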

#Use Cases for Deployments and Services

Here are some specific use cases for deployments and services.

Kubernetes Deployments

- Accelerated Product Deployment: Facilitates rapid rollout of new products or updates, crucial for maintaining market competitiveness.

- Resource Optimization: Enables dynamic scaling for efficient resource utilization, optimizing infrastructure costs.

- High Application Reliability: Automatically replaces failed Pods, ensuring continuous service availability and customer trust.

Kubernetes Services

- Stable and Scalable Access: Provides a single, stable entry point for applications, essential for handling varying traffic volumes and for controlling how the application is exposed.

- Efficient Load Balancing: Distributes network traffic across Pods, preventing overloads during peak periods and ensuring consistent performance.

- Microservices Architecture Support: Enhances a microservices approach by enabling easy service discovery and inter-service communication, leading to improved agility and maintenance.

#Deployment Strategies in Kubernetes

#Overview of Deployment Strategies

1. Rolling Update

- Description: Gradually replaces pods running the old version with pods running the new version, without downtime.

- Usage: Ideal for non-critical updates where minor issues in new versions are acceptable.

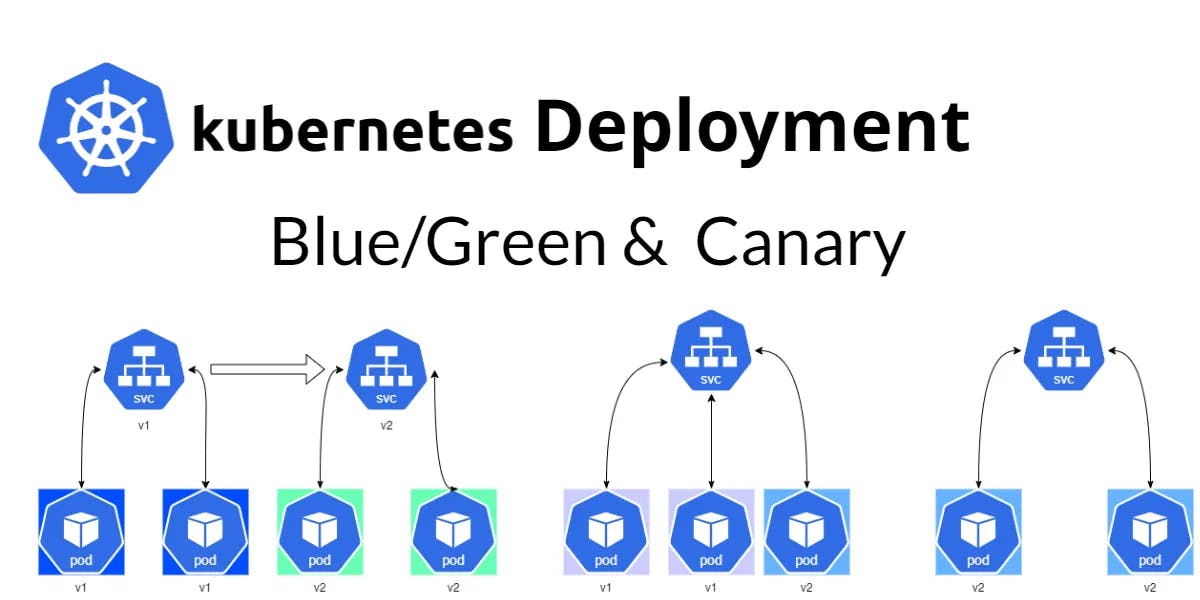

2. Blue-Green Deployment

- Description: Two identical environments are maintained; one (Blue) is the current production, and the other (Green) is the new version.

- Usage: Suited for critical applications requiring extensive testing in a production-like environment before going live. It is more expensive because it requires redundant infrastructure.

3. Canary Releases

- Description: Releases the new version to a small subset of users before rolling it out to the entire user base.

- Usage: Best for user-centric applications where feedback and user experience are critical for the update.
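One common way to approximate a canary release with plain Kubernetes objects is to run a stable and a canary Deployment behind the same Service, so that the share of traffic reaching the canary roughly follows the ratio of Pods. The sketch below only shows that arithmetic. The `canary_share` helper and the replica counts are made up for illustration, and real traffic splits will vary with connection reuse and load-balancing behavior.

```python
def canary_share(stable_replicas: int, canary_replicas: int) -> float:
    """Approximate fraction of requests served by the canary Pods."""
    total = stable_replicas + canary_replicas
    if total == 0:
        raise ValueError("at least one replica is required")
    return canary_replicas / total


# Gradually shift 10 Pods from the stable version to the canary version.
for canary in (1, 3, 5):
    stable = 10 - canary
    print(f"{stable} stable + {canary} canary -> {canary_share(stable, canary):.0%}")
```

For finer control than replica ratios allow, weighted routing is usually handled by an ingress controller or a service mesh rather than by the Service itself.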

#Best practices for choosing a deployment strategy

- Understand Your Application's Needs: Evaluate factors like criticality, user base, and downtime tolerance.

- Risk Assessment: Higher risk changes may require Blue-Green deployment for safe rollbacks.

- Resource Availability: Assess if you have the necessary resources (like additional environments for Blue-Green) for the chosen strategy.

- Feedback Loop: For applications requiring immediate user feedback, consider Canary Releases.

- Automated Testing: Ensure robust automated testing for smoother deployment, especially for Rolling Updates and Canary Releases.

#How to implement these strategies in Kubernetes

1. Rolling Update

- Utilize Kubernetes' built-in RollingUpdate strategy in the Deployment resource.

- Define parameters like maxUnavailable and maxSurge to control the update process.

- Monitor the rollout status using kubectl rollout status.

2. Blue-Green Deployment

- Prepare two separate but identical environments - Blue and Green.

- Use Kubernetes services to switch traffic from Blue to Green.

- Implement a rollback plan to revert to Blue if issues arise in Green.

3. Canary Releases

- Deploy the new version to a subset of pods within the same environment.

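- Put both versions behind the same Kubernetes Service so that the share of traffic reaching the new version roughly follows the ratio of pods, or use an ingress controller or service mesh for weighted routing.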

- Gradually increase the traffic to the new version based on monitoring and feedback.
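For the Rolling Update parameters mentioned above, it can help to work out what maxSurge and maxUnavailable mean for a given replica count. The Python sketch below assumes the documented behavior that both values default to 25%, that a percentage for maxSurge is rounded up, and that a percentage for maxUnavailable is rounded down. The helper names are hypothetical.

```python
def resolve(value, replicas: int, round_up: bool) -> int:
    """Resolve an absolute number or a percentage string such as '25%'."""
    if isinstance(value, str) and value.endswith("%"):
        percent = int(value[:-1])
        if round_up:
            return (replicas * percent + 99) // 100  # integer ceiling
        return (replicas * percent) // 100           # integer floor
    return int(value)


def rollout_bounds(replicas: int, max_surge="25%", max_unavailable="25%") -> dict:
    """Return the Pod-count limits that apply while a rolling update runs."""
    surge = resolve(max_surge, replicas, round_up=True)
    unavailable = resolve(max_unavailable, replicas, round_up=False)
    return {
        "max_total_pods": replicas + surge,
        "min_available_pods": replicas - unavailable,
    }


print(rollout_bounds(10))
# {'max_total_pods': 13, 'min_available_pods': 8}
print(rollout_bounds(4, max_surge=1, max_unavailable=0))
# {'max_total_pods': 5, 'min_available_pods': 4}
```

Setting maxUnavailable to 0 with a positive maxSurge trades extra temporary capacity for keeping the full replica count available throughout the rollout.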

#Monitoring and Managing Deployments

#Tools and techniques for monitoring Kubernetes Deployments

- Prometheus and Grafana: Use Prometheus for collecting metrics and Grafana for visualizing them. This combination provides a powerful toolset for monitoring Kubernetes clusters.

- Kubernetes Dashboard: Offers a basic, user-friendly interface for monitoring the health and performance of your cluster.

- Elastic Stack (ELK): Leverage Elasticsearch, Logstash, and Kibana for logging and monitoring. This stack is particularly useful for aggregating and analyzing large volumes of log data.

#How to update and scale deployments

- Rolling Updates: Safely update the application without downtime. Kubernetes replaces pods incrementally with new versions.

- Scaling: Manually scale your deployments by changing the number of replicas in your deployment configuration. Alternatively, use Horizontal Pod Autoscaler for automatic scaling based on CPU or memory usage.

- Blue-Green Deployment: This strategy involves running two identical environments, but only one (Blue) serves the live traffic. After deploying and verifying the new version in the Green environment, switch the traffic to it. It's excellent for zero-downtime deployments.
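To give a feel for how the Horizontal Pod Autoscaler arrives at a replica count, the sketch below applies its documented core formula: the desired replica count is the current count multiplied by the ratio of the current metric to the target metric, rounded up. It is a simplification that ignores the autoscaler's tolerance and stabilization behavior, and the function name and the minimum and maximum bounds are assumptions for the example.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Apply the basic autoscaling formula, then clamp to the allowed range."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Average CPU utilization of 90% against a 60% target: scale up.
print(desired_replicas(4, current_metric=90, target_metric=60))   # 6
# Average CPU utilization of 30% against a 60% target: scale down.
print(desired_replicas(4, current_metric=30, target_metric=60))   # 2
# A large spike is capped at max_replicas.
print(desired_replicas(8, current_metric=200, target_metric=50))  # 10
```

Manual scaling skips this calculation entirely: you set the replica count yourself and the Deployment converges on it.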

#Managing the health and resources of deployments

Here is how you can manage the health of your deployments.

- Readiness and Liveness Probes: Configure these probes to let Kubernetes know when your application is ready to serve traffic (Readiness) and if it's functioning correctly (Liveness).

- Resource Limits and Requests: Define CPU and memory requests and limits for your pods to ensure efficient resource usage and prevent one application from consuming all available resources.

- Node Affinity and Taints: Use node affinity to attract pods to particular nodes, and taints (with matching tolerations) to keep pods away from nodes, based on factors like performance requirements or data locality.
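The probes and resource settings above live in the container section of a Pod template. The following Python sketch shows what such a container entry might look like as a dictionary. The endpoint paths `/ready` and `/healthz`, the port, the timings, and the CPU and memory values are illustrative assumptions, not recommendations.

```python
import json


def container_spec(name: str, image: str, port: int) -> dict:
    """Return a container entry with probes and resource settings."""
    return {
        "name": name,
        "image": image,
        "ports": [{"containerPort": port}],
        # Readiness: the Pod only receives traffic once this check passes.
        "readinessProbe": {
            "httpGet": {"path": "/ready", "port": port},
            "initialDelaySeconds": 5,
            "periodSeconds": 10,
        },
        # Liveness: the container is restarted if this check keeps failing.
        "livenessProbe": {
            "httpGet": {"path": "/healthz", "port": port},
            "initialDelaySeconds": 15,
            "periodSeconds": 20,
        },
        "resources": {
            # Requests inform scheduling; limits cap what the container may use.
            "requests": {"cpu": "250m", "memory": "256Mi"},
            "limits": {"cpu": "500m", "memory": "512Mi"},
        },
    }


container = container_spec("web", "nginx:1.25", 8080)
print(json.dumps(container, indent=2))
```

Keeping readiness and liveness checks on separate endpoints lets an application report "not ready yet" without being restarted.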

#Common Issues and Troubleshooting

#Common issues faced during Kubernetes deployments

- Configuration Errors: Incorrect or incomplete configurations are common, often due to syntax errors or misconfigured resource limits.

- Networking Issues: Problems with network policies, service discovery, or ingress controllers can disrupt communication between pods.

- Persistent Storage Challenges: Issues in attaching or mounting persistent volumes can lead to data loss or downtime.

- Resource Limitations: Insufficient CPU, memory, or storage can cause pod failures or evictions.

- Security Concerns: Misconfigurations in role-based access control (RBAC) or secrets management can lead to vulnerabilities.

#Troubleshooting tips and solutions

- Review Configuration Files: Use YAML linters or Kubernetes schema validation tools to catch syntax errors.

- Test Network Policies: Isolate network issues by temporarily simplifying network policies. Use tools like Calico for network troubleshooting.

- Persistent Storage Checks: Ensure storage class definitions and persistent volume claims are correct. Check logs for mounting errors.

- Monitor Resource Usage: Utilize Kubernetes monitoring tools to track pod resource consumption and adjust limits accordingly.

- Audit Security Settings: Regularly review and update RBAC policies. Use secret management tools like HashiCorp Vault for handling sensitive data.

- Gradual Upgrades: Test upgrades in a non-production environment. Read release notes for potential breaking changes.
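Some configuration errors can be caught before anything reaches the cluster. The sketch below is a hypothetical, deliberately small checker written for this guide: it flags a selector that does not match the Pod template labels and containers without resource requests or limits. It is not a replacement for schema validation or for a server-side dry run such as `kubectl apply --dry-run=server`.

```python
def lint_deployment(manifest: dict) -> list[str]:
    """Return a list of human-readable problems found in a Deployment manifest."""
    problems = []
    spec = manifest.get("spec", {})
    selector = spec.get("selector", {}).get("matchLabels", {})
    template = spec.get("template", {})
    pod_labels = template.get("metadata", {}).get("labels", {})

    # Every selector label must be present on the Pod template.
    for key, value in selector.items():
        if pod_labels.get(key) != value:
            problems.append(f"selector label {key}={value} not found on Pod template")

    # Each container should declare both requests and limits.
    for container in template.get("spec", {}).get("containers", []):
        name = container.get("name", "<unnamed>")
        resources = container.get("resources", {})
        for field in ("requests", "limits"):
            if field not in resources:
                problems.append(f"container {name}: no resource {field} set")
    return problems


# A manifest with a typo in the template label and missing limits.
broken = {
    "spec": {
        "selector": {"matchLabels": {"app": "web"}},
        "template": {
            "metadata": {"labels": {"app": "wbe"}},
            "spec": {
                "containers": [
                    {"name": "web", "resources": {"requests": {"cpu": "100m"}}}
                ]
            },
        },
    }
}

for problem in lint_deployment(broken):
    print(problem)
```

A check like this fits naturally into a CI pipeline, so that mistakes surface in review rather than during a rollout.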

#Best practices to avoid these issues

- Use Version Control for Configuration: Track configuration changes in Git so you can roll back if needed.

- Implement CI/CD Pipelines: Automate deployments with continuous integration and continuous deployment to catch issues early.

- Regularly Update and Patch: Keep Kubernetes and its dependencies updated to avoid known bugs and vulnerabilities.

- Implement Monitoring and Logging: Use Prometheus and Grafana for monitoring and Elasticsearch or Fluentd for logging.

- Plan Capacity and Scalability: Regularly assess resource needs and plan for scalability to handle load changes.

#Security considerations for Kubernetes deployments

#What are the Kubernetes security principles?

- Least Privilege Principle: Minimize access rights for users, applications, and services to only what is necessary.

- Defense in Depth: Implement multiple layers of security, such as firewalls, network policies, and secure access controls.

- Regular Updates and Patch Management: Keep Kubernetes and its components up-to-date to protect against vulnerabilities.

- Audit and Monitoring: Enable Kubernetes audit logging and use tools like Prometheus and Grafana for continuous monitoring to detect and respond to threats.

- Immutable Infrastructure: Minimize changes to running infrastructure, using container images and infrastructure as code to enhance security.

#Best practices for secure Kubernetes deployment

- Use Namespaces for Resource Isolation: Segregate resources using namespaces to prevent cross-environment interference.

- Implement Network Policies: Control pod-to-pod communication within the cluster to limit potential attack vectors.

- Enable Role-Based Access Control (RBAC): Define user roles and permissions to tightly control access to Kubernetes resources.

- Regularly Scan for Vulnerabilities: Incorporate tools like Clair for container and image scanning to identify security risks.

- Manage Secrets Securely: Handle sensitive data using Kubernetes secrets or external secret management tools.
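As a sketch of how RBAC and the least privilege principle fit together, the Python code below builds a namespaced Role that can only read Pods, plus a RoleBinding that grants it to a service account. The namespace `staging`, the role name `pod-reader`, and the service account `ci-bot` are hypothetical names used only for this example.

```python
import json

READ_ONLY_VERBS = ["get", "list", "watch"]


def read_only_pod_role(namespace: str, name: str = "pod-reader") -> dict:
    """Return a Role that allows reading Pods in one namespace and nothing else."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"namespace": namespace, "name": name},
        "rules": [
            # The empty string is the core API group, where Pods live.
            {"apiGroups": [""], "resources": ["pods"], "verbs": list(READ_ONLY_VERBS)}
        ],
    }


def bind_role(role: dict, service_account: str) -> dict:
    """Return a RoleBinding that grants the Role to a service account."""
    namespace = role["metadata"]["namespace"]
    role_name = role["metadata"]["name"]
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"namespace": namespace, "name": f"{role_name}-binding"},
        "subjects": [
            {"kind": "ServiceAccount", "name": service_account, "namespace": namespace}
        ],
        "roleRef": {
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "Role",
            "name": role_name,
        },
    }


role = read_only_pod_role("staging")
binding = bind_role(role, "ci-bot")
print(json.dumps([role, binding], indent=2))
```

Scoping the Role to a single namespace and to read-only verbs means a compromised service account cannot modify workloads or reach other environments.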

#How Qovery helps in monitoring and management

Qovery simplifies the process of managing Kubernetes deployments through:

- Integrated Environment: Qovery provides an integrated environment that combines the power of Kubernetes with ease of use.

- Application Observability: Offers tools for monitoring the performance and health of applications.

- Resource Optimization: Helps in optimizing resource usage, ensuring efficient deployment operations.

#Conclusion

In this comprehensive guide, we have explored Kubernetes deployment in detail. Key takeaways include the importance of Kubernetes in orchestrating containerized applications, the dynamic nature of Pods as the basic units of deployment, and the strategic use of deployments for lifecycle management, scaling, and self-healing of applications. Additionally, the guide discusses different deployment strategies and the significance of choosing the right approach based on your application needs.

As Kubernetes continues to evolve, staying updated with the latest trends and practices is crucial. We encourage readers to continue exploring and learning Kubernetes to utilize its power in deploying and managing applications in a cloud-native ecosystem.

Your Favorite Internal Developer Platform

Qovery is an Internal Developer Platform Helping 50.000+ Developers and Platform Engineers To Ship Faster.

Try it out now!
