Implementing Microservices on AWS with the Twelve-factor App – Part 2

Welcome to the second post in our series “Implementing Microservices on AWS with the Twelve-factor App”. In the first post, we covered the codebase, configuration, code packaging, code builds, and stateless processes.

This article covers the remaining best practices for microservices. Let’s start with port mapping!

Morgan Perry

May 15, 2022 · 6 min read

Note that the best practices mentioned are from the perspective of containerized microservices, so some of the tips may apply exclusively to container-based applications.

#Port mapping

One of the best practices for containerized applications is that the application should be self-contained and should not depend on the execution environment to provide web server capabilities. In other words, the application should bundle its own web server and bind to a port on the container. The usual way to accomplish this is to export HTTP as a service by binding to a container port, so that the application listens for incoming requests on that port. The approach is language-agnostic: the application declares a web server library as a dependency, like any other library. As discussed in the previous article, dependencies should be declared explicitly and shipped along with the code.
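
As a minimal sketch of port binding, the Python service below bundles its own HTTP server (the standard library's `http.server`, standing in for whatever web framework you would declare as a dependency) and binds to a port read from a `PORT` environment variable. The `PORT` variable name, the 8080 default, and the handler are assumptions for illustration; `http.server` itself is not recommended for production traffic.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Answers every GET with a short plain-text body."""

    def do_GET(self):
        body = b"ok\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging for this sketch


def make_server(host="0.0.0.0", port=None):
    """Create an HTTP server bound to `port`, falling back to $PORT (assumed name, default 8080)."""
    if port is None:
        port = int(os.environ.get("PORT", "8080"))
    # 0.0.0.0 listens on all interfaces, so the port is reachable from outside the container.
    return HTTPServer((host, port), HelloHandler)


def main():
    server = make_server()
    print(f"listening on port {server.server_address[1]}")
    server.serve_forever()  # blocks, serving requests until the process is stopped
```

The container's entrypoint would call `main()`. Because the server lives inside the application, the same image behaves the same way on a laptop, in staging, or on ECS.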

For containerized applications specifically, the port the application listens on is declared with the EXPOSE instruction in the Dockerfile, and the ECS task definition maps that container port to a host port. AWS ECS also supports dynamic port mapping, in which ECS assigns the host port automatically. You can follow the official AWS documentation for details.
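
The ECS half of this is the task definition. The sketch below builds an ECS container definition as a Python dict with `hostPort` set to `0`, which asks ECS to assign an ephemeral host port when the task uses the `bridge` network mode on EC2 (dynamic port mapping). The service name, image URI, port, and the `PORT` environment variable are hypothetical placeholders.

```python
import json


def container_definition(name, image, container_port):
    """Return an ECS container definition that uses dynamic host port mapping."""
    return {
        "name": name,
        "image": image,
        "essential": True,
        "portMappings": [
            {
                "containerPort": container_port,  # port the app binds to inside the container
                "hostPort": 0,  # 0 = let ECS pick an ephemeral host port (bridge mode)
                "protocol": "tcp",
            }
        ],
        # Hypothetical: tell the app which port to bind to via an environment variable.
        "environment": [{"name": "PORT", "value": str(container_port)}],
    }


if __name__ == "__main__":
    definition = container_definition(
        "orders",  # hypothetical service name
        "123456789012.dkr.ecr.us-east-1.amazonaws.com/orders:latest",  # placeholder image URI
        8080,
    )
    print(json.dumps(definition, indent=2))
```

A dict like this could go into the `containerDefinitions` list of boto3's `ecs.register_task_definition(family=..., networkMode="bridge", containerDefinitions=[...])`; the sketch only builds and prints it.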

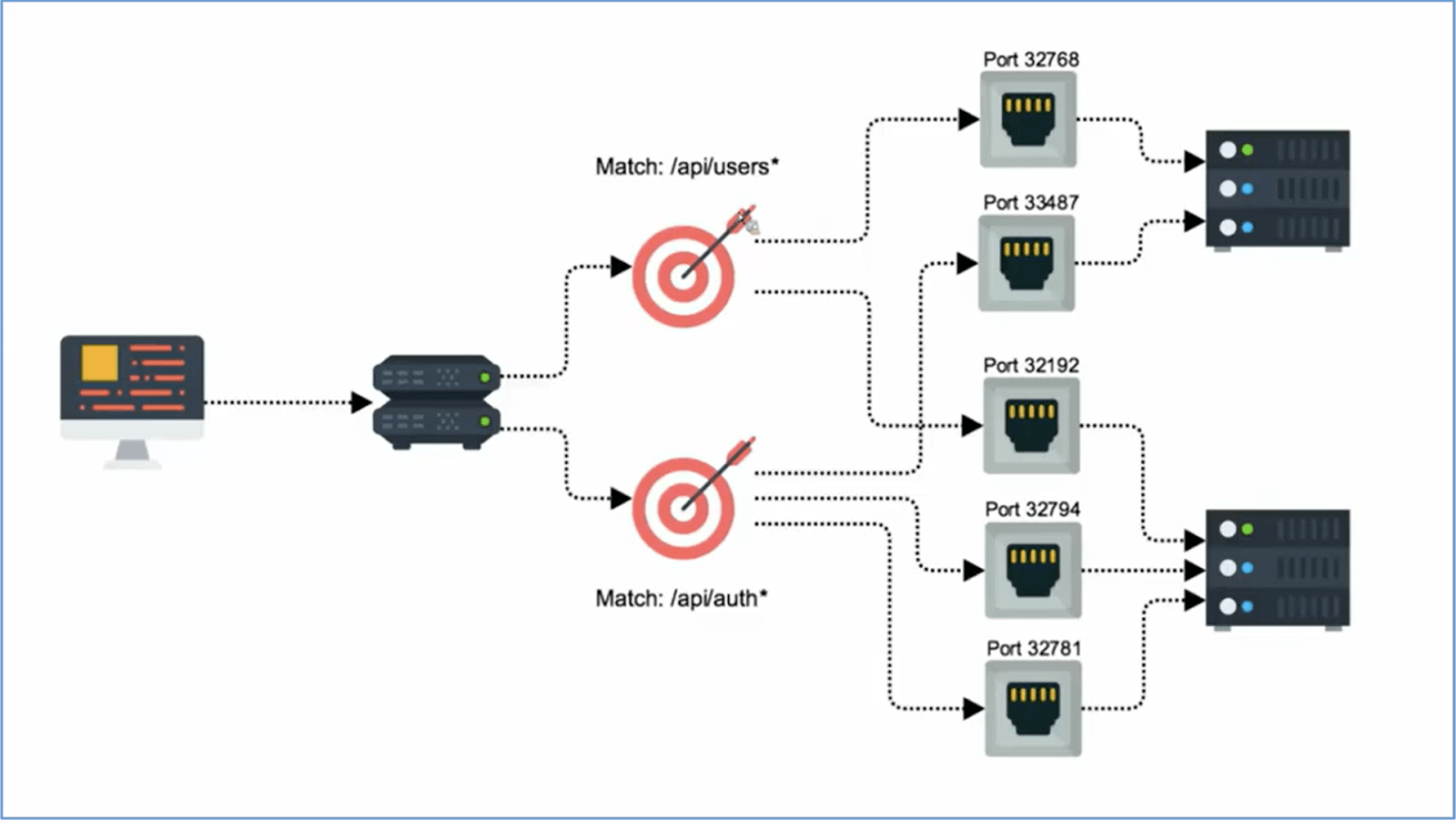

The image above illustrates this solution. Based on the URL, each request is routed to a particular service, which is distinguished by the port it is bound to. Each service has its own unique port binding, and multiple containers can run on a single host (EC2 or Fargate). The load balancer distributes calls across the services listening on different ports. Clients do not need to know the service name or port number: the load balancer routes each request to the right target group, and ECS registers each task's host port with that target group automatically.
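
Path-based routing like this is configured as listener rules on an Application Load Balancer. The sketch below builds keyword arguments for boto3's `elbv2.create_rule`, one rule per URL path pattern, each forwarding to the target group of one service. The listener ARN, path patterns, and target group ARNs are placeholders, and the function does not call AWS itself.

```python
def path_routing_rules(listener_arn, routes):
    """Build create_rule kwargs that map each path pattern to a target group.

    `routes` is an ordered mapping of path pattern -> target group ARN. Rules get
    priorities 1, 2, 3, ... in that order; the ALB evaluates lower numbers first.
    """
    rules = []
    for priority, (pattern, target_group_arn) in enumerate(routes.items(), start=1):
        rules.append(
            {
                "ListenerArn": listener_arn,
                "Priority": priority,
                "Conditions": [
                    {"Field": "path-pattern", "PathPatternConfig": {"Values": [pattern]}}
                ],
                "Actions": [{"Type": "forward", "TargetGroupArn": target_group_arn}],
            }
        )
    return rules
```

With a client from `boto3.client("elbv2")`, each dict could be applied as `client.create_rule(**rule)`. Keeping the routes in one mapping makes it easy to add a service without touching the others.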

#Concurrency

The concurrency factor is really about scalability rather than concurrency itself. The guideline is that your application should scale out (add more instances) instead of scaling up (use a bigger machine), and it should do so at the process level. Instead of replicating your whole application, each microservice, and the processes that run it, should be able to scale independently based on the load on that particular type of service.

In ECS, this is achieved through the task count at the service level. You specify the minimum number of ECS tasks that should be running at all times to handle the load. Set this count to at least two, so that if one task fails, the other can keep serving requests while ECS automatically replaces the failed task.

The task count of each service can be increased or decreased based on scalability needs.
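
To let ECS adjust the task count automatically, a service can be registered with Application Auto Scaling. This sketch builds the keyword arguments for `register_scalable_target`, enforcing the "at least two tasks" rule above. The cluster and service names and the maximum of 10 tasks are hypothetical.

```python
def ecs_scalable_target(cluster, service, min_tasks=2, max_tasks=10):
    """Build register_scalable_target kwargs for an ECS service's desired task count."""
    if min_tasks < 2:
        # With a single task, one failure means downtime until ECS replaces it.
        raise ValueError("min_tasks should be at least 2")
    if max_tasks < min_tasks:
        raise ValueError("max_tasks must be >= min_tasks")
    return {
        "ServiceNamespace": "ecs",
        "ResourceId": f"service/{cluster}/{service}",
        "ScalableDimension": "ecs:service:DesiredCount",
        "MinCapacity": min_tasks,
        "MaxCapacity": max_tasks,
    }
```

These arguments could be passed to `boto3.client("application-autoscaling").register_scalable_target(**kwargs)`. Auto scaling then keeps the task count between the two bounds.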

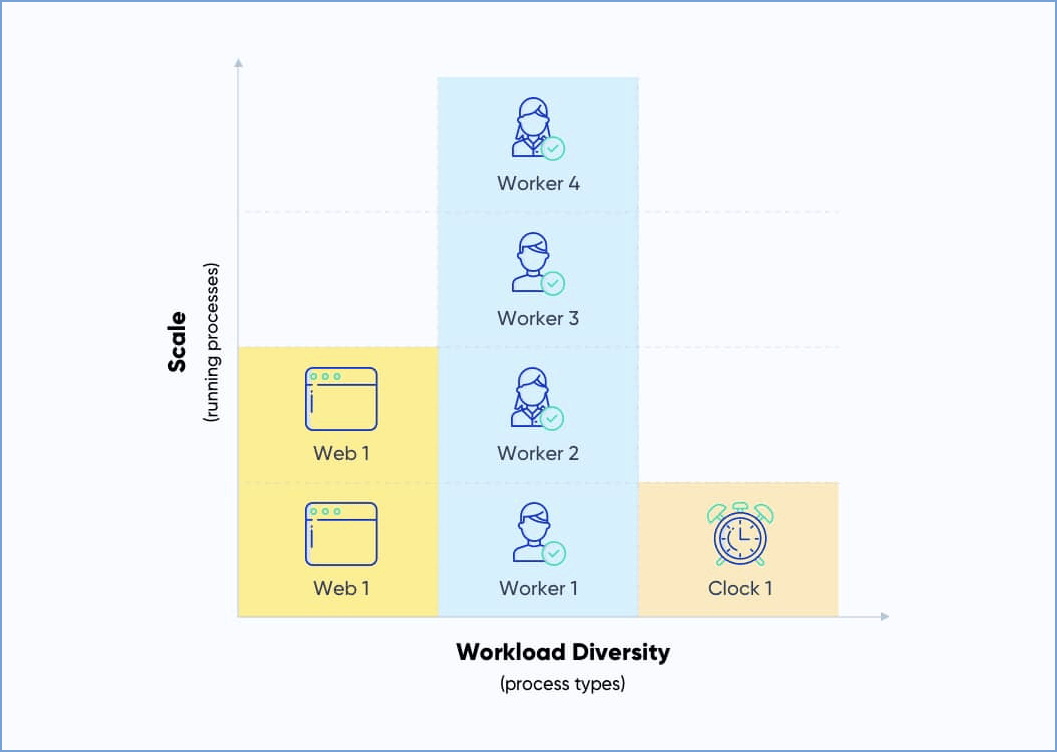

In the image above, the application has three kinds of processes: web, worker, and clock. Based on the workload, it should be possible to scale just one type of process, e.g., the worker. In the container world, each process type simply runs in its own container. This makes the solution scalable and self-contained, which allows more concurrent processing. Keep in mind that the capacity of the host also limits how much concurrent processing can be performed: the larger the host, the more work it can handle.
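
Scaling a single process type is straightforward if each type runs as its own ECS service. The sketch below assumes hypothetical services named `web`, `worker`, and `clock` in a cluster called `prod-cluster`, and builds `put_scaling_policy` arguments that attach a CPU target-tracking policy to the worker only. The 70% target and the cooldowns are illustrative values, and the worker is assumed to be registered as a scalable target already.

```python
def target_tracking_policy(cluster, service, target_cpu_percent=70.0):
    """Build put_scaling_policy kwargs that scale one ECS service on its average CPU."""
    return {
        "PolicyName": f"{service}-cpu-target-tracking",
        "ServiceNamespace": "ecs",
        "ResourceId": f"service/{cluster}/{service}",
        "ScalableDimension": "ecs:service:DesiredCount",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": target_cpu_percent,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ECSServiceAverageCPUUtilization"
            },
            "ScaleOutCooldown": 60,  # seconds to wait after a scale-out
            "ScaleInCooldown": 120,  # seconds to wait after a scale-in
        },
    }


# Hypothetical process types, each deployed as a separate ECS service.
PROCESS_TYPES = ["web", "worker", "clock"]

# Only the worker scales with load; web and clock keep their fixed task counts.
worker_policy = target_tracking_policy("prod-cluster", "worker")
```

The policy could be applied with `boto3.client("application-autoscaling").put_scaling_policy(**worker_policy)`. Because it targets one `ResourceId`, scaling the worker never changes the web or clock services.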

#Disposability

One advantage of containerized microservices is that containers have a built-in capability for disposability: the instances of a service (deployed in containers) can be removed, scaled, and redeployed quickly without data loss. As discussed under “Backing services” in the previous article, external data providers (databases, caches, etc.) help you achieve that. The guideline states the following:

- The shutdown should be graceful, and the service should also handle abrupt termination. On shutdown, the container is responsible for no longer accepting new requests and for finishing any requests already in progress. Please see the official AWS article on achieving a graceful shutdown on AWS ECS.

- You should be able to spin up and remove service instances quickly, with minimal delay. For fast startup of Docker containers, you can follow the AWS article on achieving fast startup.

- Service instances should be able to withstand sudden failure.
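
When ECS stops a task, it sends SIGTERM to the container and waits for the container's stop timeout (30 seconds by default) before sending SIGKILL. The sketch below, assuming a Python service built on the standard library's `ThreadingHTTPServer`, reacts to SIGTERM by stopping the accept loop and then waiting for in-flight request threads before exiting. It also assumes the Python process actually receives the signal, for example because it is started with the exec form of CMD. The handler and the `build_server` helper are hypothetical names for illustration.

```python
import signal
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        body = b"done\n"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the sketch quiet


def build_server(host="0.0.0.0", port=8080):
    server = ThreadingHTTPServer((host, port), Handler)
    # Non-daemon request threads, so server_close() waits for in-flight requests.
    server.daemon_threads = False
    return server


def install_graceful_shutdown(server):
    """On SIGTERM, stop the accept loop; requests already being handled may finish."""

    def handle_sigterm(signum, frame):
        # shutdown() blocks until serve_forever() returns. Python runs signal handlers
        # in the main thread, which is usually where serve_forever() runs, so calling
        # shutdown() directly here would deadlock; run it on a helper thread instead.
        threading.Thread(target=server.shutdown, daemon=True).start()

    signal.signal(signal.SIGTERM, handle_sigterm)
    return handle_sigterm


def main():
    server = build_server()
    install_graceful_shutdown(server)
    server.serve_forever()  # returns once shutdown() has been requested
    server.server_close()   # closes the listening socket, then joins request threads
    print("shut down cleanly")
```

The container's entrypoint would call `main()`. As long as in-flight requests finish within the stop timeout, the task exits on its own instead of being killed.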

#Dev/Prod Parity

The twelve-factor app recommends keeping production and non-production environments as similar as possible. That means the following should, as far as possible, be the same across all environments:

- Database. Ideally, the production database should be synced back to the staging or UAT database as frequently as possible, at least on a weekly basis. This is especially important in data-intensive applications.

- Backing services. Apart from the database, any caching solution or cloud-based data store such as Firebase should be used in non-production environments the same way it is used in production.

- Integration. Third-party integrations should be set up in the staging and UAT environments the same way as in production.

- Tools. The tools used for production should also be used for non-production environments, including the IDE, testing tools, scripts, etc.

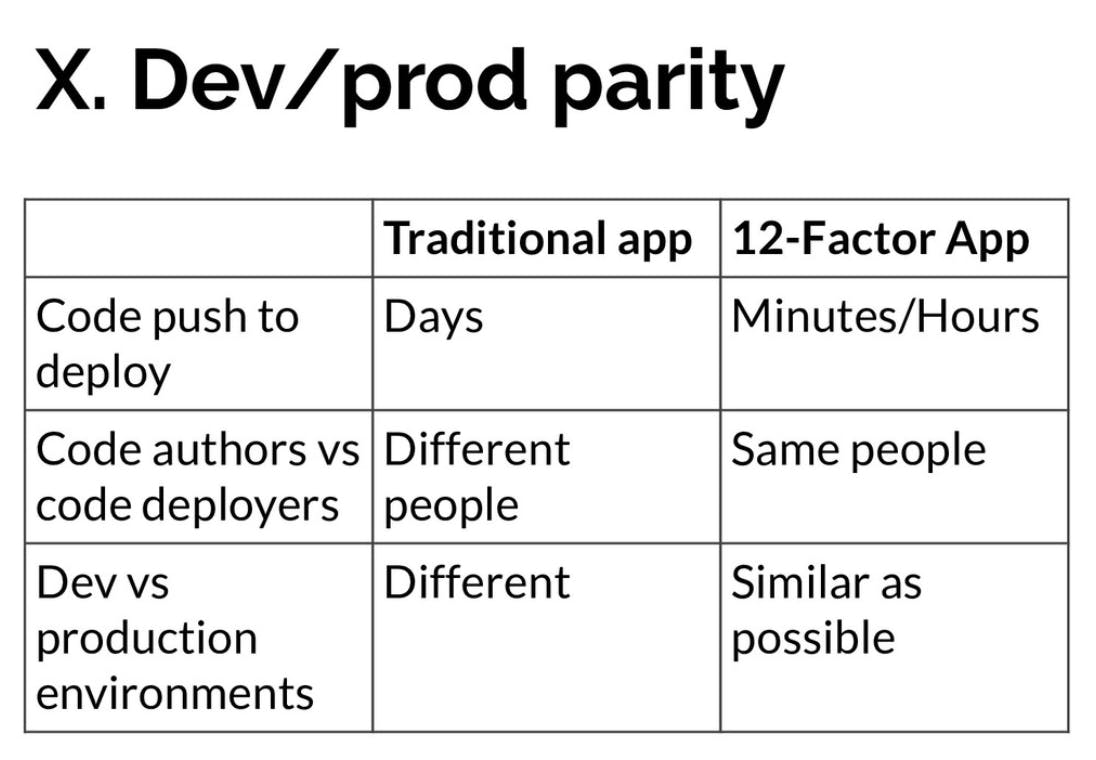

These measures ensure that testing in non-production environments closely matches testing in the real environment. The image above gives a brief comparison between a traditional app and a twelve-factor app in terms of dev/prod parity.

Once again, containerization provides a natural solution here. In the previous article, we discussed using a single codebase and deploying one container image to multiple environments. Building once and deploying many times is the key: the same Docker image is used across all environments.
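
"Build once, deploy many" works when everything that differs between environments is injected at runtime rather than baked into the image. This sketch reads the locations of backing services from environment variables, so the same image points at staging or production resources purely through its ECS task configuration. The variable names (`APP_ENV`, `DATABASE_URL`, `CACHE_URL`) and URLs are hypothetical. Parity still depends on using the same kinds of backing services, for example the same database engine, in every environment.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    environment: str
    database_url: str
    cache_url: str


def load_settings(env=None):
    """Read environment-specific values at startup; the image itself is identical everywhere."""
    env = os.environ if env is None else env
    return Settings(
        environment=env.get("APP_ENV", "development"),
        database_url=env["DATABASE_URL"],  # KeyError: fail fast if not configured
        cache_url=env["CACHE_URL"],
    )
```

Failing at startup when a backing service is not configured surfaces environment drift immediately, instead of on the first request that needs the missing service.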

#Logs

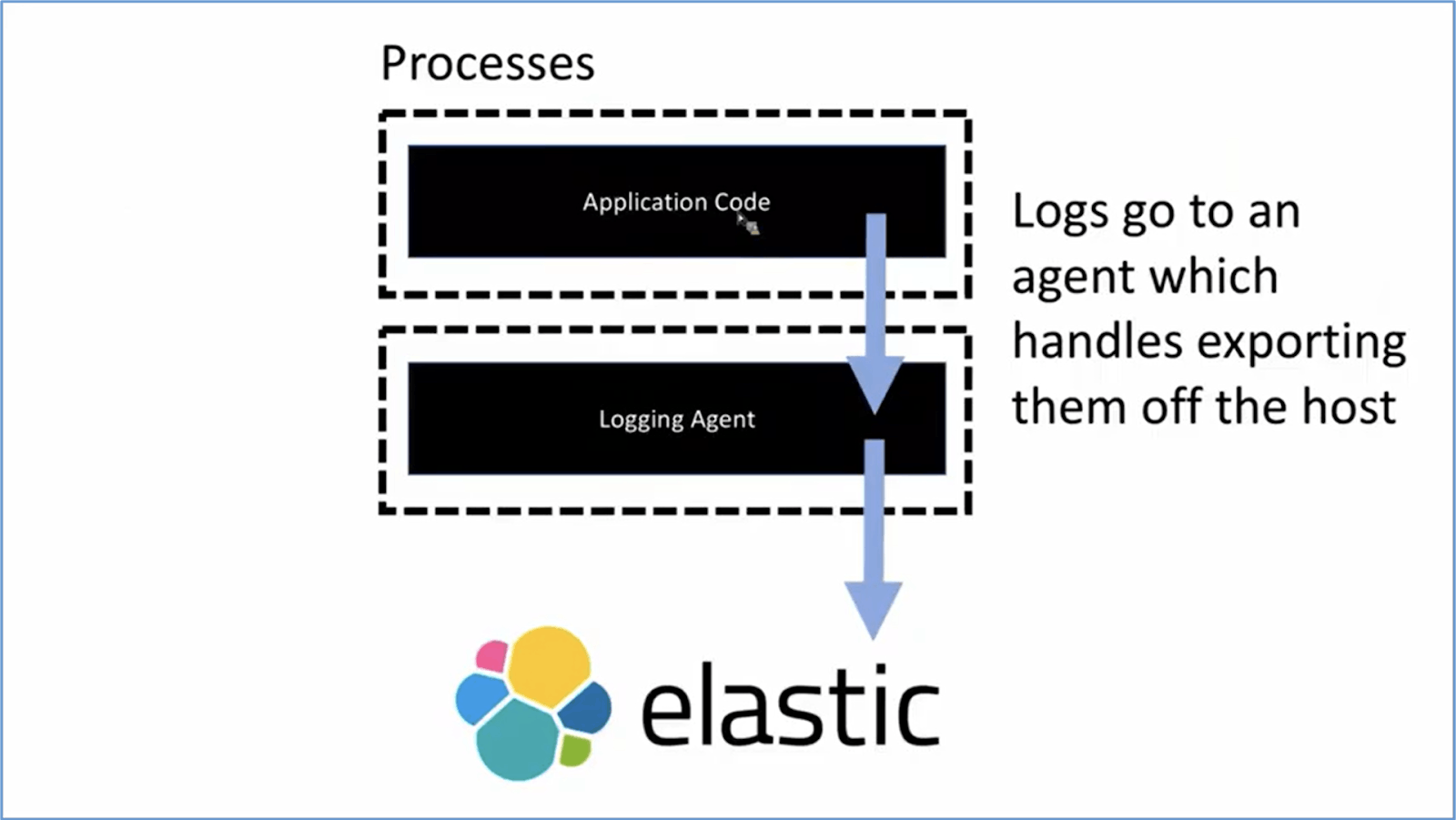

The application itself should not manage its logs; capturing and routing logs should be separate from the application. Consider the case where the application process is terminated for some reason: if the same process also manages the logs, logging stops along with it. So log handling should be implemented as a separate service that runs in its own process, separate from the core application.

In the image above, the logging agent runs as a separate process from the application. Storage, capture, and archival are not the application's responsibility; the application simply transmits its logs to the logging agent while it is running. In other words, the application has outsourced logging to another component (the logging agent).
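
On the application side, this contract is small: write each event to stdout and leave capture, routing, and storage to the agent or log driver. Below is a minimal sketch using Python's standard `logging` module. The one-JSON-object-per-line format is an assumption that makes logs easy for log tools to parse; the twelve-factor guideline does not require it.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each record as a single JSON line."""

    def format(self, record):
        return json.dumps({
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        })


def configure_logging(stream=None, level=logging.INFO):
    """Send all log records to stdout (or `stream`): no log files, no rotation."""
    handler = logging.StreamHandler(stream if stream is not None else sys.stdout)
    handler.setFormatter(JsonFormatter())
    root = logging.getLogger()
    root.handlers[:] = [handler]  # replace any existing handlers, e.g. file handlers
    root.setLevel(level)
```

After `configure_logging()`, a call such as `logging.getLogger("orders").info("order %s created", 42)` writes `{"level": "INFO", "logger": "orders", "message": "order 42 created"}` to stdout. `StreamHandler` flushes after every record, so lines reach the log driver promptly.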

For AWS ECS, you can specify the awslogs log driver in the logConfiguration object of your task definition. This sends the container's stdout and stderr streams to your chosen log group in CloudWatch Logs.
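
Here is a sketch of that logConfiguration object built as a Python dict, using the `awslogs-group`, `awslogs-region`, and `awslogs-stream-prefix` options of the `awslogs` driver. The log group name, region, prefix, and container details are hypothetical placeholders.

```python
import json


def awslogs_log_configuration(log_group, region, stream_prefix):
    """Return a container-level logConfiguration that ships stdout/stderr to CloudWatch Logs."""
    return {
        "logDriver": "awslogs",
        "options": {
            "awslogs-group": log_group,
            "awslogs-region": region,
            # Log streams are named prefix/container-name/task-id.
            "awslogs-stream-prefix": stream_prefix,
        },
    }


if __name__ == "__main__":
    container = {
        "name": "orders",  # hypothetical container name
        "image": "example/orders:latest",  # placeholder image
        "essential": True,
        "logConfiguration": awslogs_log_configuration("/ecs/orders", "us-east-1", "orders"),
    }
    print(json.dumps(container, indent=2))
```

The log group must already exist unless the `awslogs-create-group` option is set to `"true"`, which also requires the task execution role to be allowed to create log groups.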

#Admin Processes

Admin and maintenance tasks, such as data migrations, daily email reports, and syncing data from production to staging, should not be part of the core application. They should run as separate processes, preferably in an environment identical to the application's own. In containerized applications, one strategy to achieve this is the sidecar pattern.

In ECS, you can use scheduled ECS tasks for this. The admin task should have its own container and run as a single, separate process. Once the ad hoc job is complete, the task exits gracefully.
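
Scheduled ECS tasks are driven by Amazon EventBridge: a rule with a schedule expression triggers a target that runs a task definition on a cluster. The sketch below builds the arguments for EventBridge's `put_rule` and `put_targets` to run one copy of a hypothetical "daily report" task on Fargate at 06:00 UTC every day. All ARNs, subnet and security group IDs, and names are placeholders.

```python
def scheduled_admin_task(rule_name, schedule, cluster_arn, task_definition_arn,
                         role_arn, subnets, security_groups):
    """Return (put_rule kwargs, put_targets kwargs) for a scheduled one-off ECS task."""
    rule = {
        "Name": rule_name,
        "ScheduleExpression": schedule,  # e.g. "cron(0 6 * * ? *)" = 06:00 UTC daily
        "State": "ENABLED",
    }
    targets = {
        "Rule": rule_name,
        "Targets": [
            {
                "Id": f"{rule_name}-target",
                "Arn": cluster_arn,  # the target is the ECS cluster
                "RoleArn": role_arn,  # role EventBridge assumes to run the task
                "EcsParameters": {
                    "TaskDefinitionArn": task_definition_arn,
                    "TaskCount": 1,  # a single process that exits when the job is done
                    "LaunchType": "FARGATE",
                    "NetworkConfiguration": {
                        "awsvpcConfiguration": {
                            "Subnets": subnets,
                            "SecurityGroups": security_groups,
                            "AssignPublicIp": "DISABLED",
                        }
                    },
                },
            }
        ],
    }
    return rule, targets
```

With `events = boto3.client("events")`, the pair could be applied as `events.put_rule(**rule)` followed by `events.put_targets(**targets)`. The task's container image is the same one the application uses, so the admin job runs against identical code and configuration.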

#Conclusion

In this article, we discussed port binding, concurrency, disposability, dev/prod parity, logs, and admin processes according to the twelve-factor guidelines. Containerized microservices are becoming popular because they are lightweight and offer built-in support for scalability, reliability, and resilience. We also looked at how to make the best use of AWS ECS to support these best practices. However, implementing containerized microservices on AWS takes more than basic knowledge; it requires real technical expertise. This is where Qovery comes in: you can take advantage of all the benefits of containerized microservices and implement the twelve-factor methodology without worrying about the technical complexity of container-based microservices.

Your Favorite DevOps Automation Platform

Qovery is a DevOps Automation Platform Helping 200+ Organizations To Ship Faster and Eliminate DevOps Hiring Needs

Try it out now!
