What is Kubernetes Architecture?

Kubernetes is an open-source platform designed to automate deploying, scaling, and managing containerized applications. It groups the containers that make up an application into logical units for easy management and discovery. Understanding the architecture of Kubernetes is crucial for anyone who works with this platform, because it shows how the different components of a Kubernetes cluster interact with each other and how applications run on the platform. In this article, we will discuss Kubernetes architecture in detail. We will explore its various components and their interactions, and provide example configurations to explain their functionality. Stay tuned to gain a comprehensive understanding of Kubernetes architecture!

Morgan Perry

February 6, 2024 · 10 min read

#Kubernetes Architecture Overview

#Master-Slave Architecture

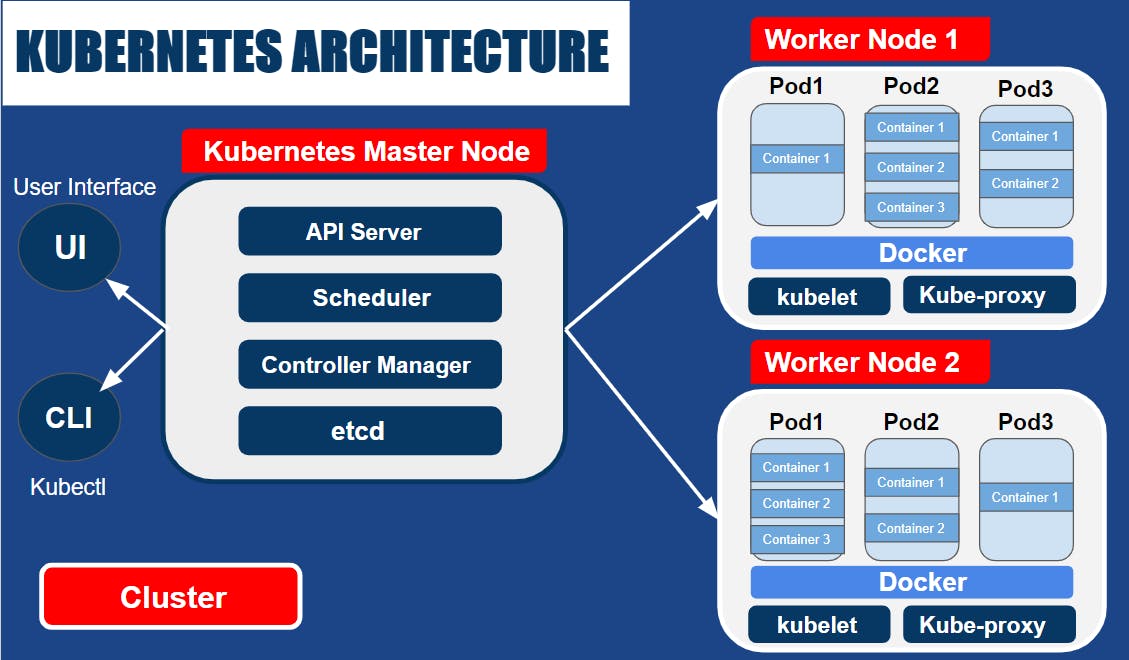

Kubernetes follows a master-slave architecture. Here’s a simple explanation:

- Master Node: The master node is the control plane of Kubernetes. It makes global decisions about the cluster (like scheduling), and it detects and responds to cluster events (like starting up a new pod when a deployment’s replicas field is unsatisfied).

- Worker Nodes: Worker nodes are the machines where your applications run. Each worker node runs at least:

  - Kubelet, a process responsible for communication between the Kubernetes master and the node; it manages the pods and the containers running on the machine.

  - A container runtime (such as containerd or CRI-O), which is responsible for pulling the container image from a registry, unpacking the container, and running the application.

The master node communicates with worker nodes and schedules pods to run on specific nodes.

The accompanying diagram illustrates how the master node works with worker nodes and which components run inside each worker node.

#Core Components

Here are the main components of Kubernetes:

- Pods: A Pod is the smallest and simplest unit in the Kubernetes object model that you create or deploy. A Pod represents a running process on your cluster and can contain one or more containers.

- Services: A Kubernetes Service is an abstraction that defines a logical set of Pods and a policy by which to access them (such a set of Pods is sometimes called a micro-service).

- Volumes: A Volume is essentially a directory that is accessible to the containers in a pod that mount it. It can be used to store data and application state.

- Namespaces: Namespaces provide a scope for names and are a way to divide cluster resources between multiple users or teams.

- Deployments: A Deployment controller provides declarative updates for Pods and ReplicaSets. You describe a desired state in a Deployment, and the Deployment controller changes the actual state to the desired state at a controlled rate.
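As a rough illustration of how namespaces divide cluster resources, the following sketch creates a namespace and caps what it may consume with a ResourceQuota. The namespace name team-a and the quota values are hypothetical placeholders chosen for this example.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: team-a              # hypothetical namespace name
---
apiVersion: v1
kind: ResourceQuota
metadata:
  name: team-a-quota        # hypothetical quota name
  namespace: team-a
spec:
  hard:
    pods: "10"              # at most 10 Pods in this namespace
    requests.cpu: "4"       # total CPU requested by all Pods in the namespace
    requests.memory: 8Gi    # total memory requested by all Pods in the namespace
```

Objects created in team-a live in that name scope, so two teams can each have a Service called my-service without clashing. Once a CPU or memory quota is set, Pods in that namespace must declare the corresponding requests (or inherit defaults from a LimitRange), otherwise they are rejected.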

#Master Components

In Kubernetes, the master components make global decisions about the cluster, and they detect and respond to cluster events. Let’s discuss each of these components in detail.

#API Server

The API Server is the front end of the Kubernetes control plane. It exposes the Kubernetes API, which users (for example through kubectl), external tools, and the other control plane and node components use to perform operations on the cluster. The API Server processes REST operations, validates them, and updates the corresponding objects in etcd.

#etcd

etcd is a consistent and highly available key-value store used as Kubernetes’ backing store for all cluster data. It stores the configuration and state of the Kubernetes cluster at any given point in time. Whenever part of the cluster changes, the API server writes the new state to etcd.

#Scheduler

The Scheduler is a component of the Kubernetes master that is responsible for selecting the best node for each pod to run on. When a pod is created without an assigned node, the scheduler picks a node for it based on resource availability, constraints, affinity and anti-affinity specifications, data locality, inter-workload interference, and deadlines.
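To make these inputs concrete, here is a minimal Pod sketch showing two things the scheduler takes into account: resource requests (a node must have enough unreserved CPU and memory) and a required node affinity rule. The disktype=ssd node label and the Pod name are assumptions for illustration; if no node carries that label, the Pod stays Pending.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: scheduling-demo             # hypothetical name
spec:
  affinity:
    nodeAffinity:
      # Hard rule: only nodes labeled disktype=ssd (an assumed label) are candidates.
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: disktype
                operator: In
                values: ["ssd"]
  containers:
    - name: web
      image: nginx:1.14.2
      resources:
        requests:
          cpu: "500m"               # the node must have half a CPU unreserved
          memory: "256Mi"           # and 256 MiB of unreserved memory
```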

#Controller Manager

The Controller Manager is a daemon that embeds the core control loops shipped with Kubernetes. In other words, it regulates the state of the cluster and performs routine tasks to maintain the desired state. For example, if a pod goes down, the Controller Manager will notice this and start a new pod to maintain the desired number of pods.
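The reconciliation idea is easiest to see with a ReplicaSet, one of the objects handled by a controller that runs inside the Controller Manager. In this hypothetical manifest the desired state is three Pods labeled app: frontend; if one of those Pods disappears (for example, it is deleted or evicted), the ReplicaSet controller sees that fewer than three matching Pods exist and creates a replacement. The name frontend is a placeholder, and in practice you would usually let a Deployment manage ReplicaSets for you.

```yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: frontend              # hypothetical name
spec:
  replicas: 3                 # desired state: three matching Pods
  selector:
    matchLabels:
      app: frontend           # Pods counted toward the desired state
  template:                   # used to create replacement Pods
    metadata:
      labels:
        app: frontend
    spec:
      containers:
        - name: web
          image: nginx:1.14.2
```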

Here is example output of the kubectl get componentstatuses command, which checks the health of core control plane components including the scheduler, the controller manager, and etcd. Note that this command has been deprecated since Kubernetes v1.19.

```
NAME                 STATUS    MESSAGE             ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-0               Healthy   {"health":"true"}
```

#Node Components

Kubernetes worker nodes host the pods that make up the application workload. The key components of a worker node are the Kubelet (the main Kubernetes agent on the node), Kube-proxy (the network proxy), and the container runtime (which runs the containers). Let’s discuss them in detail.

#Kubelet

Kubelet is the primary "node agent" that runs on each node. Its main job is to ensure that containers are running in a Pod. It watches for instructions from the Kubernetes Control Plane (the master components) and ensures the containers described in those instructions are running and healthy.

The Kubelet works from a set of PodSpecs (YAML or JSON objects that describe a pod), which it receives mainly from the API server, and ensures that the containers described in those PodSpecs are running and healthy.
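A PodSpec can also tell the Kubelet how to judge whether a container is healthy. In this minimal sketch (the Pod name is a placeholder), the Kubelet sends an HTTP GET to / on port 80 every 10 seconds, starting 5 seconds after the container starts, and restarts the container if the probe keeps failing.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: liveness-demo         # hypothetical name
spec:
  containers:
    - name: web
      image: nginx:1.14.2
      ports:
        - containerPort: 80
      livenessProbe:          # executed by the Kubelet on the node
        httpGet:
          path: /
          port: 80
        initialDelaySeconds: 5
        periodSeconds: 10
```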

#Kube-proxy

Kube-proxy is a network proxy that runs on each node in the cluster, implementing part of the Kubernetes Service concept. It maintains network rules that allow network communication to your Pods from network sessions inside or outside of your cluster.

Kube-proxy ensures that the networking environment (routing and forwarding) is predictable and accessible, but isolated where necessary.
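How Kube-proxy implements Service rules is configurable. The sketch below is a KubeProxyConfiguration that selects IPVS mode instead of iptables. How this configuration reaches kube-proxy (a --config flag, or in kubeadm clusters the kube-proxy ConfigMap in the kube-system namespace) depends on how your cluster was installed, so treat it as an illustration rather than a drop-in change.

```yaml
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
# Program Service rules with IPVS instead of iptables (requires IPVS kernel support on the node).
mode: "ipvs"
ipvs:
  scheduler: "rr"   # round-robin across the Service's endpoints
```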

#Container Runtime

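Container runtime is the software responsible for running containers. Kubernetes supports several container runtimes, including containerd, CRI-O, and any other implementation of the Kubernetes CRI (Container Runtime Interface). Docker Engine is no longer supported directly, because the built-in dockershim was removed in Kubernetes v1.24, but it can still be used through the cri-dockerd adapter.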

Each runtime offers different features, but all must be able to run containers according to a specification provided by Kubernetes.
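When a node's runtime is configured with more than one handler, a RuntimeClass lets a Pod choose between them. In this sketch the handler name runsc (gVisor) is an assumption: it only works if the container runtime on the node has a handler with that name configured. The object names are placeholders.

```yaml
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: sandboxed             # hypothetical RuntimeClass name
handler: runsc                # assumption: a gVisor handler configured in the node's CRI runtime
---
apiVersion: v1
kind: Pod
metadata:
  name: sandboxed-pod         # hypothetical name
spec:
  runtimeClassName: sandboxed # run this Pod's containers with the handler above
  containers:
    - name: app
      image: nginx:1.14.2
```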

#How components interact when a pod is scheduled on a node

- Scheduling the Pod: When you create a pod, the Kubernetes Control Plane selects a node for the pod to run on.

- Kubelet's Role: Once the pod is assigned to a node, the Kubelet on that node is informed that a new pod has been assigned to it. The Kubelet reads the PodSpec, pulls the required container images, and starts the containers.

- Container Runtime: The container runtime on the node then runs the containers based on the specifications provided by the Kubelet.

- Kube-proxy and Networking: Meanwhile, Kube-proxy updates the node's network rules to allow communication to and from the containers in the pod according to the Service definitions.

#Pods and Services

#Pods

In Kubernetes, a Pod is the smallest deployable unit of computing that can be created and managed. It represents a single instance of a running process in a cluster and can contain one or more containers.

#Services

A Service in Kubernetes is an abstract way to expose an application running on a set of Pods as a network service. It groups a set of pod endpoints (IP addresses) together to enable communication without needing to know a lot about the network topology.

#Sample configuration

Here’s a simple example of a Pod and Service definition in YAML:

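```yaml
# Pod definition
apiVersion: v1
kind: Pod
metadata:
  name: my-app
  labels:
    app: my-app              # the Service below selects Pods by this label
spec:
  containers:
    - name: my-app
      image: my-app:1.0
      ports:
        - containerPort: 9376   # port the application listens on
---
# Service definition
apiVersion: v1
kind: Service
metadata:
  name: my-service
spec:
  selector:
    app: my-app              # matches the Pod label above
  ports:
    - protocol: TCP
      port: 80               # port exposed by the Service
      targetPort: 9376       # port on the Pod that receives the traffic
```

In this example, the Pod my-app runs a single container using the my-app:1.0 image and carries the label app: my-app. The Service my-service selects Pods with that label, exposes the application on port 80, and routes network traffic to the my-app Pod on port 9376. The Pod label must match the Service selector; otherwise the Service has no endpoints to route to.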

#Volumes and Persistent Storage

#What are Volumes and Persistent Storage

In Kubernetes, managing storage is a distinct concept from managing compute instances. Let’s dive into it.

A Volume in Kubernetes is a directory, possibly with some data in it, which is accessible to the containers in a pod. It’s a way for pods to store and share data. A volume’s lifecycle is tied to the pod that encloses it.
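For example, an emptyDir volume lets two containers in the same Pod share files. In this sketch (the names and the busybox image tag are placeholders), the writer container writes a file that the reader container then prints. The volume is created when the Pod is assigned to a node and deleted when the Pod is removed.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: shared-volume-demo    # hypothetical name
spec:
  volumes:
    - name: shared-data
      emptyDir: {}            # lives exactly as long as the Pod
  containers:
    - name: writer
      image: busybox:1.36
      command: ["sh", "-c", "echo hello > /data/msg && sleep 3600"]
      volumeMounts:
        - name: shared-data
          mountPath: /data
    - name: reader
      image: busybox:1.36
      # Wait briefly for the writer, then print the shared file.
      command: ["sh", "-c", "sleep 5 && cat /data/msg && sleep 3600"]
      volumeMounts:
        - name: shared-data
          mountPath: /data
```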

A Persistent Volume (PV) is a piece of storage in the cluster that has been provisioned by an administrator or dynamically provisioned using Storage Classes. It is a resource in the cluster just like a node is a cluster resource.

#How Kubernetes Handles Storage and Data Persistence

Kubernetes uses Persistent Volume Claims (PVCs) to handle storage and data persistence. A PVC is a request for storage by a user. It is similar to a pod in that pods consume node resources and PVCs consume PV resources.

#Example configuration snippet

Here’s an example of a PersistentVolume and PersistentVolumeClaim in YAML:

```yaml
# PersistentVolume
apiVersion: v1
kind: PersistentVolume
metadata:
  name: my-pv
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain   # keep the data after the claim is deleted
  storageClassName: slow
  hostPath:
    path: "/mnt/data"                     # directory on the node (suitable for single-node testing)
---
# PersistentVolumeClaim
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc
spec:
  storageClassName: slow                  # must match the PV's storage class
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 500Mi
```

In this example, a PersistentVolume named my-pv is created with a storage capacity of 1Gi. A PersistentVolumeClaim named my-pvc is also created, which requests 500Mi of storage. The my-pvc claim binds to the my-pv volume because their storage class and access mode match and the volume's 1Gi capacity satisfies the 500Mi request; the claim is then bound to the whole 1Gi volume.
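A Pod consumes the claim by referencing it by name; it never refers to the PersistentVolume directly. This sketch (the Pod name and mount path are illustrative) mounts my-pvc into an nginx container, so files written under /usr/share/nginx/html outlive the Pod itself.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pvc-demo                  # hypothetical name
spec:
  volumes:
    - name: storage
      persistentVolumeClaim:
        claimName: my-pvc         # the claim defined above
  containers:
    - name: web
      image: nginx:1.14.2
      volumeMounts:
        - name: storage
          mountPath: /usr/share/nginx/html
```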

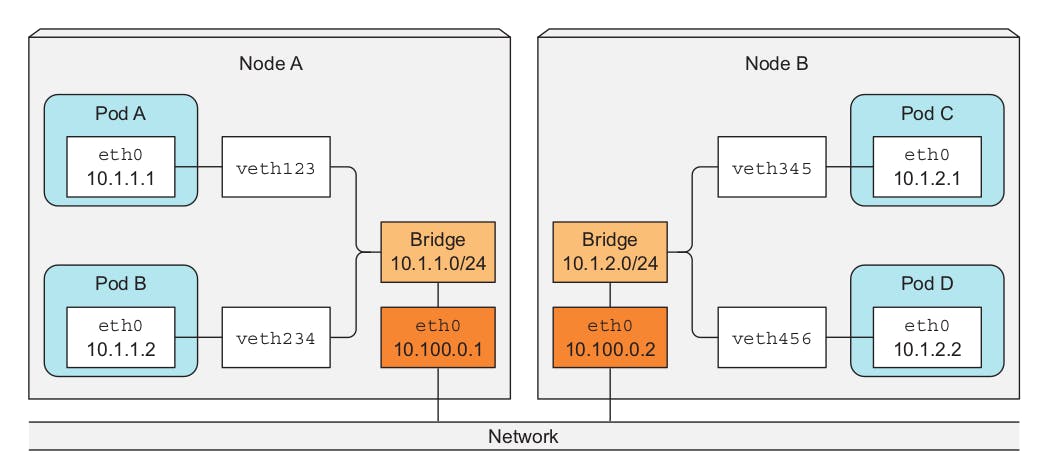

#Networking in Kubernetes

Networking is a central part of Kubernetes, but it can be challenging to understand exactly how it is expected to work. Let’s dive into it.

#Overview of Networking Concepts in Kubernetes

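In Kubernetes, every pod has its own IP address that is unique within the cluster, and every node has its own IP address as well. Pods can therefore reach each other directly by IP, across nodes and without NAT, and applications do not have to coordinate which host ports they use. Here are some key concepts: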

- Pod-to-Pod networking: Each Pod is assigned a unique IP. All containers within a Pod share the network namespace, including the IP address and network ports.

- Service networking: A service in Kubernetes is an abstraction that defines a logical set of Pods and a policy by which to access them. Services enable loose coupling between dependent Pods.

- Ingress networking: Ingress exposes HTTP and HTTPS routes from outside the cluster to services within the cluster.

#Network Policies and Ingress

Network Policies in Kubernetes provide a way of managing connections to pods based on labels and ports. It’s like a firewall for your pod, defining who can or cannot connect to it.
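As a minimal sketch, the policy below admits traffic to Pods labeled app: my-app only from Pods labeled role: frontend, and only on TCP port 9376. The role: frontend label and the policy name are hypothetical, and NetworkPolicies are only enforced if the cluster's network plugin supports them (Calico and Cilium do, for example).

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-frontend            # hypothetical name
spec:
  podSelector:
    matchLabels:
      app: my-app                 # the Pods this policy protects
  policyTypes:
    - Ingress                     # all other inbound traffic to these Pods is denied
  ingress:
    - from:
        - podSelector:
            matchLabels:
              role: frontend      # hypothetical client label
      ports:
        - protocol: TCP
          port: 9376
```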

Ingress, on the other hand, manages external access to the services in a cluster, typically HTTP. Ingress can provide load balancing, SSL termination, and name-based virtual hosting.
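The following sketch routes HTTP requests for a hypothetical host app.example.com to the my-service Service from the earlier example. An Ingress does nothing on its own: the ingressClassName value nginx assumes an NGINX Ingress controller is installed in the cluster.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-ingress                # hypothetical name
spec:
  ingressClassName: nginx         # assumption: an NGINX Ingress controller is installed
  rules:
    - host: app.example.com       # hypothetical host name
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-service
                port:
                  number: 80
```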

The accompanying diagram illustrates pod-to-pod communication.

#Workloads

In Kubernetes, a workload is an application or a part of an application running on Kubernetes. Let’s discuss different types of workloads.

#Deployments

A Deployment provides declarative updates for Pods and ReplicaSets. You describe the desired state in a Deployment, and the Deployment controller changes the actual state to the desired state.

#StatefulSets

StatefulSets are used for workloads that need stable, unique network identifiers, stable persistent storage, and ordered, graceful deployment and scaling.
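A minimal StatefulSet sketch follows. It is paired with a headless Service (clusterIP: None) so each Pod gets a stable DNS name, and volumeClaimTemplates gives each replica its own PersistentVolumeClaim. The names are placeholders, and the claims assume the cluster has a default StorageClass that can provision 1Gi volumes dynamically.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: web                       # headless Service providing per-Pod DNS names
spec:
  clusterIP: None
  selector:
    app: web
  ports:
    - port: 80
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web                       # hypothetical name
spec:
  serviceName: web                # must reference the headless Service above
  replicas: 2                     # Pods are created in order: web-0, then web-1
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: nginx
          image: nginx:1.14.2
          volumeMounts:
            - name: data
              mountPath: /usr/share/nginx/html
  volumeClaimTemplates:           # one PVC per replica: data-web-0, data-web-1
    - metadata:
        name: data
      spec:
        accessModes: ["ReadWriteOnce"]
        resources:
          requests:
            storage: 1Gi
```

If web-1 is rescheduled, it comes back with the same name and reattaches to the same data-web-1 claim.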

#DaemonSets

A DaemonSet ensures that all (or some) nodes run a copy of a pod. As nodes are added to the cluster, pods are added to them. As nodes are removed from the cluster, those pods are garbage collected.
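A DaemonSet manifest looks much like a Deployment without a replicas field, since the number of Pods follows the number of eligible nodes. In this hypothetical sketch, each worker node runs one busybox Pod that mounts the node's /var/log directory read-only; a real cluster would run an agent such as a log shipper or monitoring exporter here instead.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-log-reader           # hypothetical name
spec:
  selector:
    matchLabels:
      app: node-log-reader
  template:
    metadata:
      labels:
        app: node-log-reader
    spec:
      containers:
        - name: reader
          image: busybox:1.36
          # Placeholder workload: list the node's log directory once a minute.
          command: ["sh", "-c", "while true; do ls /host-logs; sleep 60; done"]
          volumeMounts:
            - name: varlog
              mountPath: /host-logs
              readOnly: true
      volumes:
        - name: varlog
          hostPath:
            path: /var/log        # the node's own log directory
```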

#Jobs

A Job creates one or more pods and ensures that a specified number of them terminate successfully. It is well suited to tasks that run to completion, such as batch processing or one-off maintenance scripts.
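Here is a minimal Job sketch, similar to the example in the Kubernetes documentation, that computes pi to 2000 digits and then exits. With restartPolicy: Never, a failed Pod is not restarted in place; instead the Job creates a new Pod, and backoffLimit caps how many retries happen before the Job is marked as failed.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: pi
spec:
  completions: 1                # one successful Pod completes the Job
  backoffLimit: 4               # give up after 4 retries
  template:
    spec:
      restartPolicy: Never      # Job Pods must use Never or OnFailure
      containers:
        - name: pi
          image: perl:5.34.0
          command: ["perl", "-Mbignum=bpi", "-wle", "print bpi(2000)"]
```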

#Example configuration

Here’s an example of a Deployment in YAML:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx            # must match the Pod template labels below
  replicas: 3               # desired number of Pods
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.14.2
          ports:
            - containerPort: 80
```

In this example, a Deployment named nginx-deployment is created, which spins up 3 replicas of nginx pods.

#ConfigMaps and Secrets

#Managing Application Configurations and Sensitive Information

In Kubernetes, ConfigMaps and Secrets are two key components that allow us to manage application configurations and sensitive information.

- ConfigMaps: These are used to store non-confidential data in key-value pairs. ConfigMaps allow you to decouple configuration artifacts from image content to keep containerized applications portable.

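- Secrets: These are similar to ConfigMaps, but are used to store sensitive information like passwords, OAuth tokens, and SSH keys. Storing confidential information in a Secret is safer and more flexible than putting it verbatim in a Pod definition or in a container image. Note, however, that Secret values are only base64-encoded by default, not encrypted, so access to them should be restricted (for example, with RBAC and encryption at rest for etcd).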

#Example configuration snippet: creating a ConfigMap and Secret from literal values

Here’s how you can create a ConfigMap and a Secret using literal values:

```bash
# Create a ConfigMap from literal values
kubectl create configmap example-config --from-literal=key1=value1 --from-literal=key2=value2

# Create a Secret from literal values
kubectl create secret generic example-secret --from-literal=username=admin --from-literal=password=secret
```

In the above commands, example-config and example-secret are the names of the ConfigMap and Secret respectively. The --from-literal flag is used to specify each key-value pair for the ConfigMap and Secret.
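A Pod can then consume both objects. In this sketch (the Pod name and the DB_PASSWORD variable name are hypothetical), envFrom turns every key in example-config into an environment variable, while secretKeyRef pulls only the password key from example-secret.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: config-demo               # hypothetical name
spec:
  containers:
    - name: app
      image: busybox:1.36
      # Print the ConfigMap values (not the Secret) and keep the container running.
      command: ["sh", "-c", "echo key1=$key1 key2=$key2 && sleep 3600"]
      envFrom:
        - configMapRef:
            name: example-config  # every key becomes an environment variable
      env:
        - name: DB_PASSWORD       # hypothetical variable name
          valueFrom:
            secretKeyRef:
              name: example-secret
              key: password
```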

#Conclusion

We hope you found this overview of Kubernetes architecture useful. It covered the master-slave structure, core components such as Pods, Services, and Volumes, and node components such as the Kubelet and Kube-proxy, and showed how Kubernetes automates the deployment, scaling, and management of containerized applications. A platform like Qovery simplifies Kubernetes complexities, offering tools that let developers manage Kubernetes effortlessly and focus on development rather than infrastructure challenges. Try Qovery for free and get rid of the hassles of typical Kubernetes management.

Your Favorite Internal Developer Platform

Qovery is an Internal Developer Platform Helping 50.000+ Developers and Platform Engineers To Ship Faster.

Try it out now!
