How we manage CI sensitive data for our Open Source deployment Engine

At Qovery, we're developing an open source project called "Qovery Engine". It is hosted on GitHub (https://github.com/Qovery/engine) and is used to deploy:

- Kubernetes infrastructure based on Cloud providers (AWS, Scaleway, DigitalOcean...)

- Cloud managed services (PostgreSQL, Redis, MongoDB, etc.)

- Containers on Kubernetes

- And many other smaller pieces to manage the whole infrastructure

We have to run several kinds of tests for this project:

- Unit tests: tests without any external dependencies

- Functional tests: tests that validate broader behavior and depend on external providers, requiring specific access (Cloud providers, Let's Encrypt, Cloudflare, etc.)

- End-to-end tests: tests that validate the platform from a user's point of view. In addition to third-party providers, they also need access to our Qovery API

We're using GitHub Actions to run some tests, but we can't run them all publicly for security reasons. This article covers the choices we've made to manage sensitive data, and their tradeoffs.

Dedicated runners and security

We're using our own runners for the CI, which speeds up Rust builds thanks to more CPU and parallelized tests.

From a security point of view, managing third-party Cloud providers' credentials for an Open Source project is a nightmare. Why? Because we would like to allow anyone to submit a PR as a contribution. But the test logs, which are required to understand what happened when something goes wrong, must not be shown or be accessible at any cost.

That is not possible with public logs, as there are several places where sensitive information could be stolen once it has been used (Terraform state file, Kubernetes secrets in a Helm chart rendering, etc.).

The GitHub documentation itself warns in several places about the risk of leaking sensitive data.

Vault with tests

First of all, let's see how we prevent secrets (passwords, tokens, etc.) from ending up in the code.

To give you an idea, functional tests use ~40 secrets today, stored in a Vault cluster and rotated frequently. This number grows over time as we add new cloud providers, managed services, tokens, and other sensitive data. Anyone familiar with Cloud providers will recognize critical data among them.

We're using Vault to keep those secrets accessible only to Qovery staff. They can easily access all that information directly when they want to run functional tests from their workstation, without having to worry about availability, changes, etc.

Note: you can find the complete implementation in the Qovery Engine repository on GitHub.
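As a rough illustration of the idea only (this is not the engine's code, which is written in Rust), the sketch below reads a set of test secrets through Vault's KV version 2 HTTP API. The mount name `secret`, the path `functional-tests`, and the helper names are hypothetical; `VAULT_ADDR` and `VAULT_TOKEN` are the usual Vault environment variables.

```python
import json
import os
import urllib.request


def build_vault_request(addr: str, token: str, mount: str, path: str) -> urllib.request.Request:
    """Build a GET request for a KV v2 secret: /v1/<mount>/data/<path>."""
    url = f"{addr.rstrip('/')}/v1/{mount}/data/{path}"
    # Vault authenticates HTTP calls with the X-Vault-Token header.
    return urllib.request.Request(url, headers={"X-Vault-Token": token})


def extract_secrets(response_body: str) -> dict:
    """KV v2 wraps the key/value pairs under data.data in the JSON response."""
    return json.loads(response_body)["data"]["data"]


def load_test_secrets(mount: str = "secret", path: str = "functional-tests") -> dict:
    """Fetch the secrets using VAULT_ADDR and VAULT_TOKEN from the environment.

    The mount and path defaults are hypothetical placeholders.
    """
    request = build_vault_request(
        os.environ["VAULT_ADDR"], os.environ["VAULT_TOKEN"], mount, path
    )
    with urllib.request.urlopen(request) as response:
        return extract_secrets(response.read().decode("utf-8"))
```

With a valid token, a teammate would call `load_test_secrets()` once at the start of a test run and read credentials from the returned dictionary, so nothing sensitive has to live in the repository.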

It's a massive time saver for our teammates, and it simplifies usage.

But how do contributors access them? That's the point! We don't want the world to have access to those secrets!

Here comes the tradeoff! We allow anyone to build and to run unit tests and linter checks, but functional tests can only be run, and their logs seen, by the Qovery team. We're going to see how we did it.

GitLab pipelines coupled with GitHub Actions

To summarize, our code lives on GitHub and our functional test pipelines on GitLab, while unit tests run on the GitHub side.

GitHub Actions

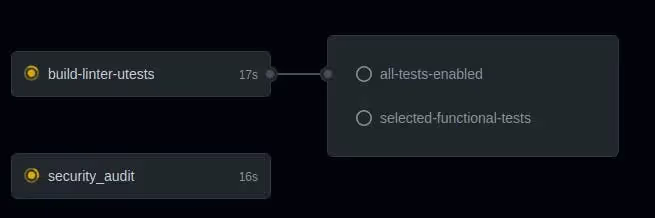

On the GitHub side, here is the pipeline we have:

As you can see, there are several kinds of jobs. The first part is fully open to the world:

- build-linter-utests: we perform the build, linting, and unit tests in the same job.

- security_audit: automatically checks whether vulnerabilities have been discovered in the Rust libraries we're using

Then we have the restricted part, where we're going to use GitLab pipelines:

- selected-functional-tests: it runs functional tests

- all-tests-enabled: ensures all tests are enabled. Useful to reject any PR that doesn't have all tests activated (we'll see later how we filter on them)

You can find the complete GitHub Actions configuration in the Qovery Engine repository.

GitLab pipelines

This is the private part. When GitHub Actions reaches the "selected-functional-tests" job, it calls the GitLab API to request a pipeline run made of multiple jobs.

Only members of the Qovery GitHub organization, with specific permissions on our side, can run those tests. We have a security check on both the GitHub Actions side and the GitLab side to ensure that only members can run this GitLab pipeline.
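The trigger step in this section can be sketched as follows, assuming GitLab's pipeline trigger endpoint (`POST /projects/:id/trigger/pipeline`) is what gets called. The project ID, the token, and the function name are placeholders for illustration; the real workflow may build the request differently.

```python
import urllib.parse
import urllib.request


def build_trigger_request(gitlab_url: str, project_id: int, trigger_token: str,
                          ref: str, variables: dict) -> urllib.request.Request:
    """Build the POST request that asks GitLab to start a pipeline on `ref`."""
    form = {"token": trigger_token, "ref": ref}
    # Pipeline variables are passed as form fields named variables[KEY].
    for key, value in variables.items():
        form[f"variables[{key}]"] = value
    body = urllib.parse.urlencode(form).encode("utf-8")
    url = f"{gitlab_url.rstrip('/')}/api/v4/projects/{project_id}/trigger/pipeline"
    return urllib.request.Request(url, data=body, method="POST")
```

Passing the result to `urllib.request.urlopen` would send the call. Keeping the request construction separate makes it easy to inspect what leaves the public side: only a ref and a few variables, never the secrets themselves.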

In this GitLab pipeline, you can see multiple jobs. The first column is simple:

- Build: not exactly like on GitHub because we add some Qovery magic sauce here

- Linter: the linter now also checks the code with the Qovery magic sauce added

Then we have all the tests, split by Qovery-supported cloud provider (AWS, DigitalOcean, and Scaleway at the time of writing):

- Infrastructure: here we run entire infrastructure deployments, upgrades, pauses, and destroys

- Self-hosted: we deploy fake container apps with databases, caches, routing, etc.

- Managed Services: we deploy fake container apps with managed services (RDS, ElastiCache...) to validate connectivity and the ease of access provided by the Qovery magic sauce

Filter tests from GitHub

All those tests on GitLab take time (mostly the infra tests): up to 2h in total, even though they all run in parallel on multiple containers.

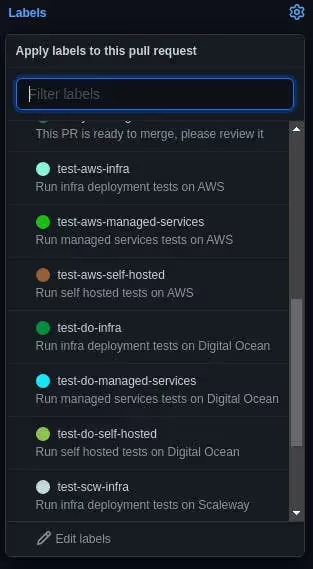

From a GitHub Pull Request, we can select the tests we want to run to reduce testing time. To do so, we're using GitHub labels to filter functional tests:

When the "functional tests" job starts, the selected labels are used to filter the tests to run on the GitLab side. This works by calling the GitLab pipeline API with variables that contain the desired tests.

As you can see in the screenshot, all tests are running there; it's the default behavior when no GitHub labels are selected.

When several tests are selected, they are separated by commas, and we can then automatically run those specific ones on the GitLab side.

Contribution and tests

When we receive a contribution and need to run functional tests to validate the PR, we duplicate it so that the requester (a Qovery team member) can run those tests and finally merge it.

Side-by-side branch updates

Sometimes we have to test a specific branch on GitHub, but we also need to add code to the GitLab repo for the testing part. How do we manage that?

You may have noticed that we have a variable on the GitLab side called "GITHUB_ENGINE_BRANCH_NAME". When GitHub triggers a GitLab job, it sends the current branch name. If a branch with the same name exists on both the GitLab and GitHub sides, that branch is used to run the tests.
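The branch selection can be sketched like this. The fallback branch name `main` and the function name are assumptions; the text only states that a same-named branch is used when it exists on both sides.

```python
def resolve_gitlab_branch(github_branch: str, gitlab_branches: set[str],
                          default_branch: str = "main") -> str:
    """Pick the GitLab branch to test with for a given GitHub branch.

    `github_branch` is the value received in GITHUB_ENGINE_BRANCH_NAME.
    """
    if github_branch in gitlab_branches:
        return github_branch
    # Assumed fallback: no matching branch, so use the default one.
    return default_branch
```

This keeps the common case simple: most PRs only touch the GitHub repository and run against the default GitLab branch, while paired changes just need the same branch name on both sides.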

Conclusion

Building this kind of setup took time. We don't regret investing in it, even though it's not perfect: contributors are blind to the functional test logs. It forced us to think differently, and using Vault inside a project for testing purposes is very pleasant. All of this is the tradeoff we've made to combine security with publicly validated tests.
