Our migration from Kubernetes Built-in NLB to ALB Controller

Key Points:

- The Maintenance Dead-End: Kubernetes’ built-in NLB is considered a legacy codebase that is no longer actively maintained by AWS, leading to persistent bugs where resources aren't properly cleaned up after deletion.

- Operational Workarounds: Before migrating, the Qovery team had to manually instrument the Qovery Engine to deal with orphaned load balancers that the native Kubernetes implementation failed to remove.

- Feature Gaps: The transition was necessitated by the need for advanced networking features not supported by the built-in NLB, specifically the PROXY protocol for IP preservation and fine-grained target group attributes.

- Migration Complexity: AWS does not provide a transparent path to move from the built-in NLB to the ALB Controller; it requires managing new NLB availability times and potentially disruptive CNAME changes.

Working with Kubernetes Services is convenient, especially when you can deploy Load Balancers via cloud providers like AWS. At Qovery, we initially started with Kubernetes’ built-in Network Load Balancer (NLB). However, we decided to move to the AWS Load Balancer Controller (ALB Controller).

In this article, I explain why we made this switch and how it benefits our infrastructure. We will discuss the reasons for the transition, the features of the ALB Controller, and provide a guide for deploying it. This shift has helped us simplify management, reduce costs, and enhance performance. By understanding these points, you can decide if the ALB Controller is right for your Kubernetes setup.

Important consideration: We should have been using the ALB Controller from the beginning, since migrating can be painful depending on your configuration. Adopting it from day one, or as early as possible, would have been the best option!

Why did we start with the built-in NLB

For our customers and several technical people we discussed this with, NLB is the default choice because:

- Ease of Use: Simple to configure and use with built-in Kubernetes service annotations.

- Kubernetes Native: Uses Kubernetes-native objects, reducing the need for AWS-specific knowledge.

- Cloud-Agnostic: It is easier to migrate to other cloud providers or on-premises environments without deep AWS integration. As we support multiple cloud providers in the managed offering, we must maintain maximum transparency for our customers and be able to port functionalities to every supported cloud provider. Without some NLB features, this compatibility is not possible.

- Maintenance: Minimal maintenance overhead compared to managing additional AWS services and controllers.

Why did we move to the ALB controller

Migration to the ALB Controller came late, four years after we started using the built-in NLB. We were able to live without it for a long time.

However, during this time, we faced issues such as NLBs not being cleaned up correctly after deletion (on the Kubernetes side).

We contacted AWS support and looked through the GitHub issues on Kubernetes. The conclusion is that the built-in NLB is a legacy part of the Kubernetes code base that is no longer maintained, and AWS support told us they would not invest in fixes because they now develop the ALB Controller instead.

When using Kubernetes built-in NLB, be prepared to manage issues manually 😅.

This is what we did! We manually instrumented our Qovery Engine to manage this kind of issue.

But recently, we wanted to leverage some NLB features not present in Kubernetes's built-in NLB, so we had to move to the ALB Controller.

The move to the ALB Controller brings useful features such as:

- service.beta.kubernetes.io/aws-load-balancer-proxy-protocol: This annotation enables the PROXY protocol on the load balancer. The PROXY protocol preserves the client source IP by adding an extra header with the original IP address.

- service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: This annotation specifies the target type for the Network Load Balancer (NLB). An NLB supports two target types: instance (targets are EC2 instances) and ip (targets are IP addresses, which can be external or internal).

- service.beta.kubernetes.io/aws-load-balancer-target-group-attributes: This annotation allows you to set attributes on the target groups created by the load balancer. Target group attributes provide fine-tuned control over the behavior of the load balancer.
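As a rough illustration, the three annotations above could be combined on a Service like this. This is a minimal sketch rather than our production configuration: the chosen values (`"*"`, `ip`, and the two deregistration attributes) are assumptions picked for the example, and you should check them against the controller version you run.

```yaml
# Fragment of a Service manifest (metadata only); values are illustrative assumptions.
metadata:
  annotations:
    # Enable PROXY protocol v2 on the target groups so backends can read the client IP.
    service.beta.kubernetes.io/aws-load-balancer-proxy-protocol: "*"
    # Register pod IPs directly as targets instead of EC2 instances.
    service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: "ip"
    # Comma-separated key=value pairs applied to the generated target groups.
    service.beta.kubernetes.io/aws-load-balancer-target-group-attributes: "deregistration_delay.timeout_seconds=30,deregistration_delay.connection_termination.enabled=true"
```

Keep in mind that once PROXY protocol is enabled, the backend (for example NGINX) must be configured to parse it, otherwise connections will fail.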

Moving to the ALB Controller is an old topic for Qovery, as we already raised it a few years back when encountering the issues discussed above.

Why didn’t we move to the ALB Controller before

The main reason is that it was one more thing to maintain on our end. But this time, we had no choice since some features requested by our customers required specific configurations that the native Kubernetes NLB implementation couldn’t handle.

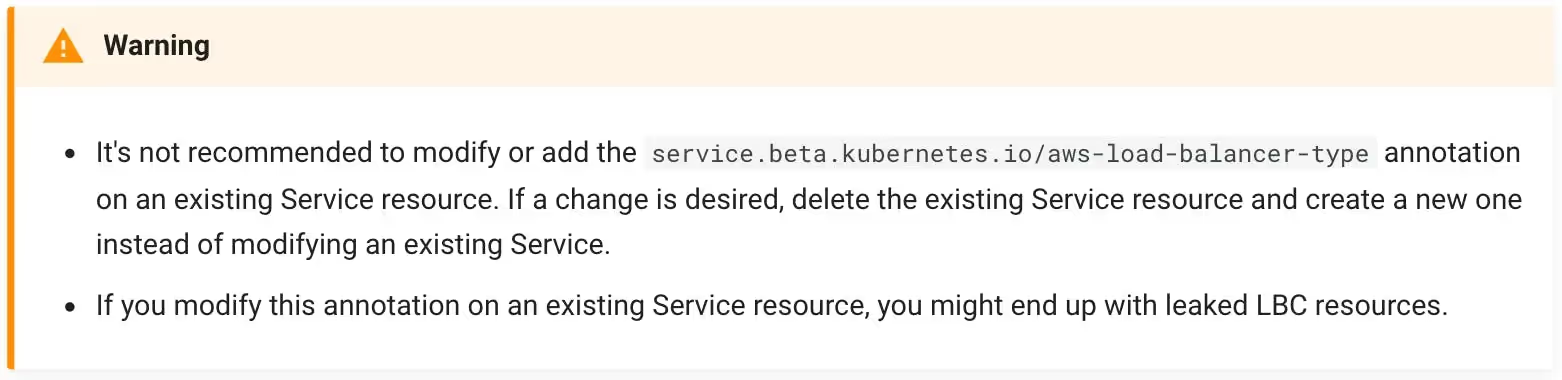

Even if you keep the same Load Balancer type (NLB), you unfortunately can’t migrate in place from Kubernetes's "built-in NLB" to an "ALB Controller NLB". cf. documentation:

And obviously, this has been confirmed as well by contacting AWS support 😭.

So if you have a DNS CNAME pointing directly to the NLB DNS name (xxx.elb.eu-west-3.amazonaws.com), associated TLS/SSL certificates, or anything else directly connected to it, you will have to manage the switch carefully to avoid or reduce downtime as much as possible.

In anticipation, at Qovery, we use our own domain on top of the NLB domain name, so TLS is not a concern for us. What we do have to manage is the CNAME change and the time it takes for the new NLB to become available 🤩.

However, it’s a shame that the AWS ALB Controller does not manage it transparently. The consequences regarding reliability and time investment for the migration are high. For most companies, it’s a problem. I’m worried that AWS didn’t consider it. Luckily for our customers, it's fully transparent.

Yes, at Qovery, we manage thousands of EKS clusters for our customers. If you are interested in this, read this article.

Deployment

I won’t go into details because several tutorials are already available on the internet. To summarize, here is the Terraform configuration to prepare ALB Controller permissions:

Then deploying the Helm ALB controller chart is not complicated:
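For reference, a values file for the `aws-load-balancer-controller` chart from the `eks-charts` repository might look roughly like the sketch below. The cluster name, VPC ID, account ID, and role name are hypothetical placeholders, and the role is assumed to be the IAM role prepared for the controller's permissions.

```yaml
# values.yaml: minimal sketch for the aws-load-balancer-controller Helm chart.
# Every concrete value below is a hypothetical placeholder.
clusterName: my-eks-cluster
region: eu-west-3
vpcId: vpc-0123456789abcdef0

serviceAccount:
  create: true
  name: aws-load-balancer-controller
  annotations:
    # IRSA: bind the service account to the IAM role holding the controller permissions.
    eks.amazonaws.com/role-arn: arn:aws:iam::111122223333:role/aws-load-balancer-controller
```

The chart is usually installed into the `kube-system` namespace with these values.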

Now you’re ready to deploy NLB managed by ALB controller:
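A minimal sketch of such a Service is shown below. It is not our exact manifest: the selector label, port, and scheme are assumptions for the example, and only the `nginx-ingress` name comes from this article.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-ingress
  annotations:
    # Name given to the load balancer on the AWS side.
    service.beta.kubernetes.io/aws-load-balancer-name: nginx-ingress
    # Assumption: a public load balancer; use "internal" for a private one.
    service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing
    service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: ip
spec:
  type: LoadBalancer
  # Hands the Service to the AWS Load Balancer Controller instead of the built-in implementation.
  loadBalancerClass: service.k8s.aws/nlb
  selector:
    app: nginx-ingress  # hypothetical pod label
  ports:
    - name: https
      port: 443
      targetPort: 443
      protocol: TCP
```

Note that `loadBalancerClass` cannot be changed on an existing Service, which is consistent with the need to create a new NLB rather than convert the old one.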

If you look into the EC2 console, you should see your named load balancer (here: nginx-ingress).

Conclusion

The ALB Controller offers many more features than the built-in NLB and lets you extend your usage to ALB if desired.

We hope AWS will include it in the EKS add-ons to simplify lifecycle management and shorten the setup and deployment phase.

Companies should be strongly encouraged to move to the ALB Controller sooner rather than later to avoid a lengthy migration process.
