Deploying AI Apps with GPUs on AWS EKS and Karpenter

Why Use AWS EKS with Karpenter

AWS EKS provides a managed Kubernetes service that simplifies running Kubernetes without needing to install, operate, and maintain your own cluster control plane. Combined with Karpenter, an open-source, high-performance Kubernetes cluster autoscaler, you get a flexible and cost-effective solution that can efficiently manage the provisioning and scaling of nodes based on the application's requirements.

Karpenter handles variable workloads by provisioning nodes that match what pending pods need, at the moment they need it. This suits AI applications with sporadic or compute-intensive tasks that require GPUs. (I wrote a separate article on Karpenter if you want to learn more.)

Install AWS EKS and Karpenter with Qovery

To begin, you'll need to set up AWS EKS and Karpenter. Qovery integrates seamlessly into your AWS environment, allowing you to set up EKS with Karpenter with just a few clicks:

- Create a Qovery account: connect to the Qovery web console.

- Create AWS EKS: Add your AWS EKS cluster, choose the region, and configure your cluster specifications.

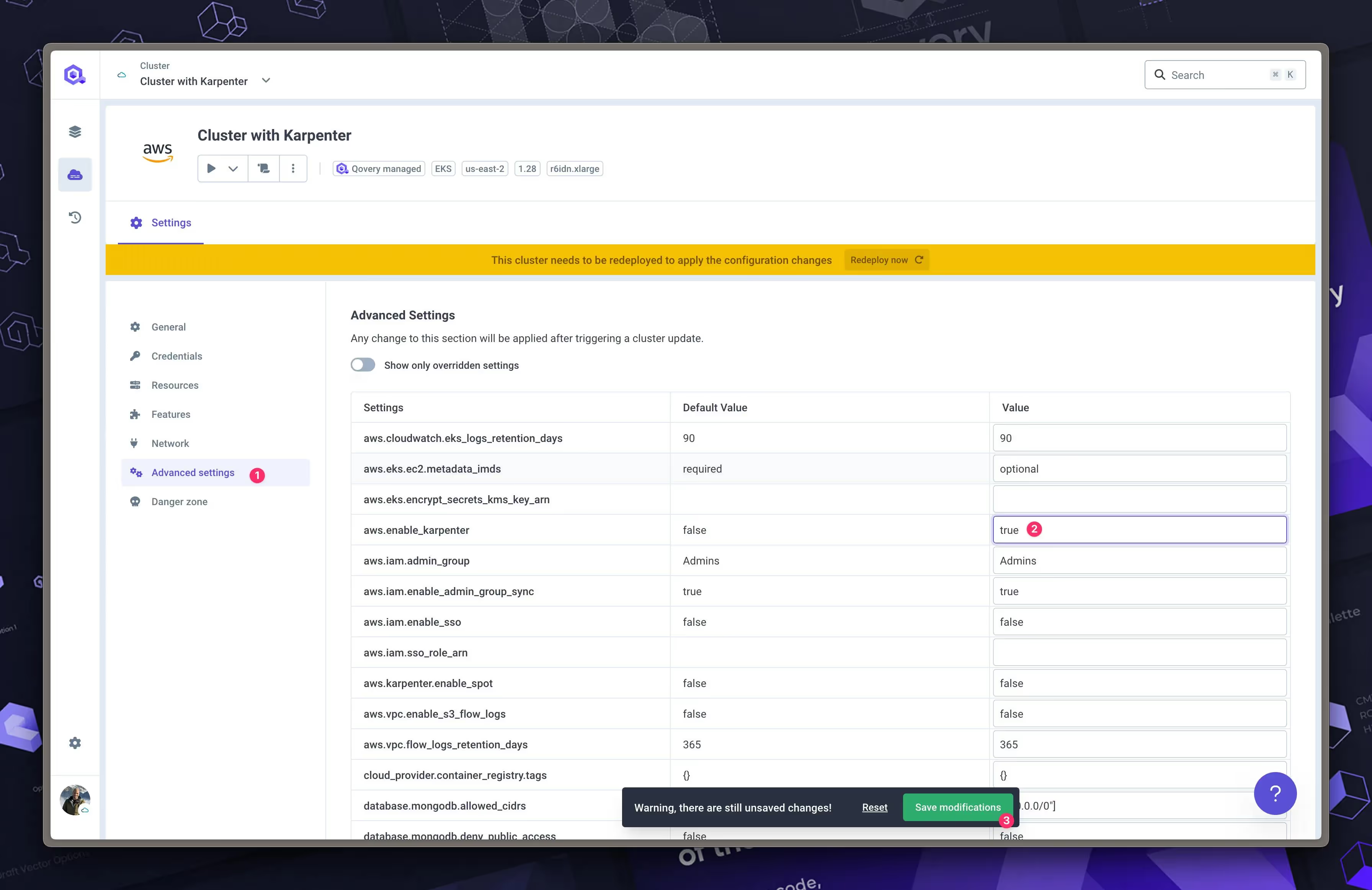

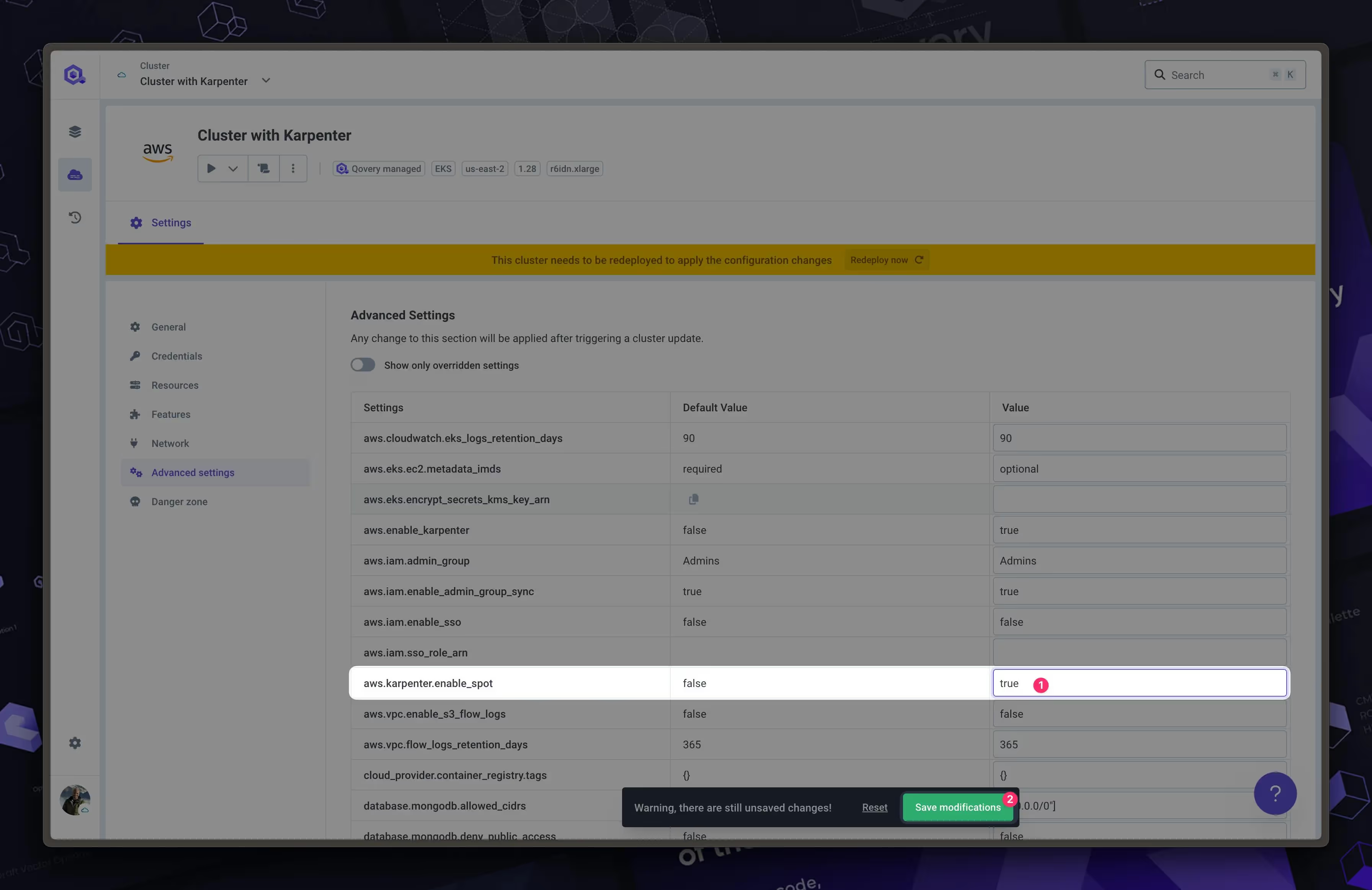

- Enable Karpenter: With the cluster ready, install Karpenter directly from the cluster advanced settings. Qovery automates the integration process, ensuring Karpenter aligns with your EKS settings for optimal performance.

Install NVIDIA device plugin on AWS EKS

The NVIDIA device plugin for Kubernetes is an implementation of the Kubernetes device plugin framework that advertises GPUs as available resources to the kubelet.

The plugin is required because it is what lets Kubernetes pods request GPUs (exposed as the nvidia.com/gpu resource) and have them scheduled onto GPU nodes. We will install it with the official NVIDIA Helm chart.

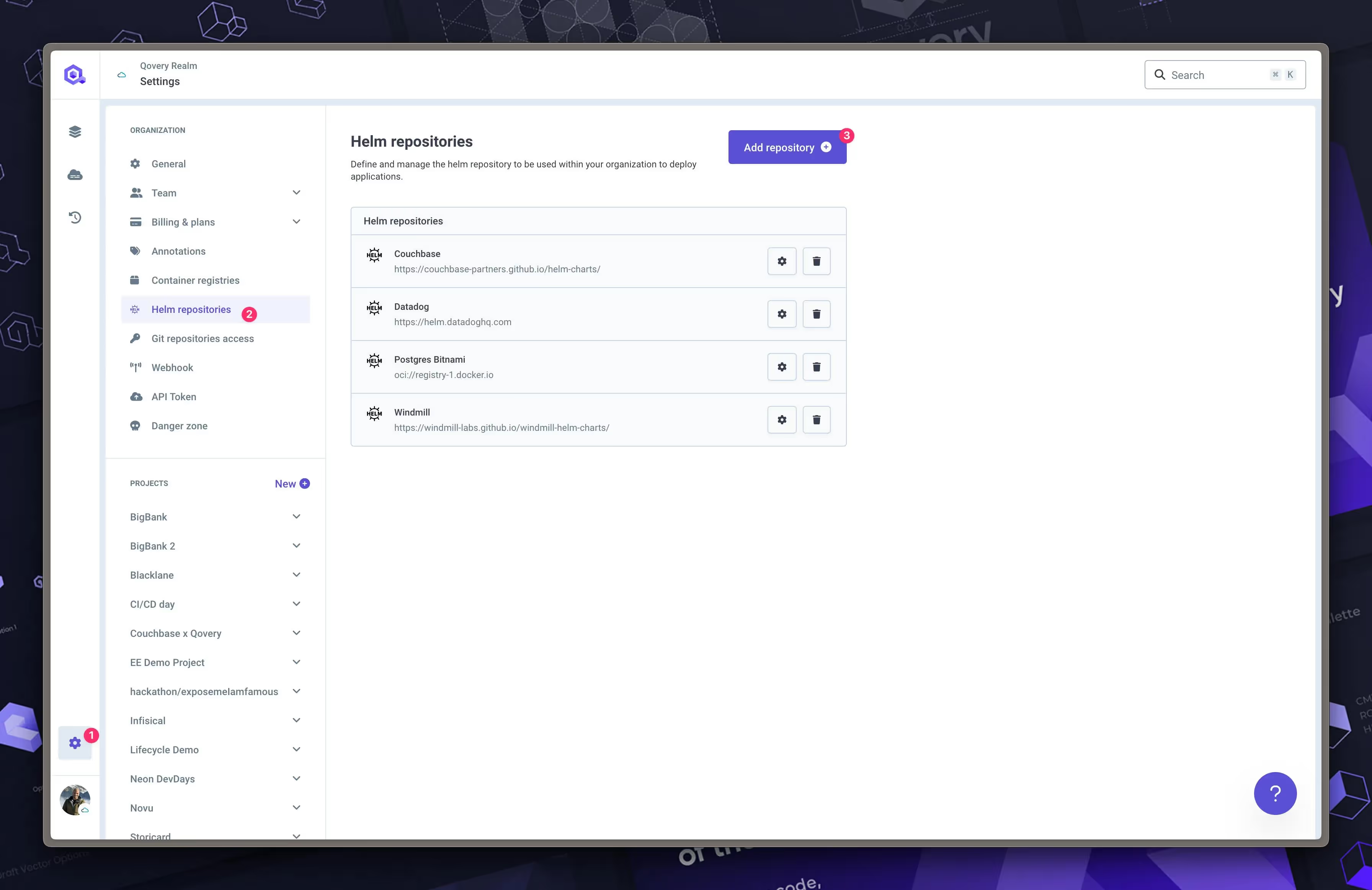

With Qovery, navigate to Organization Settings > Helm Repositories and click "Add repository".

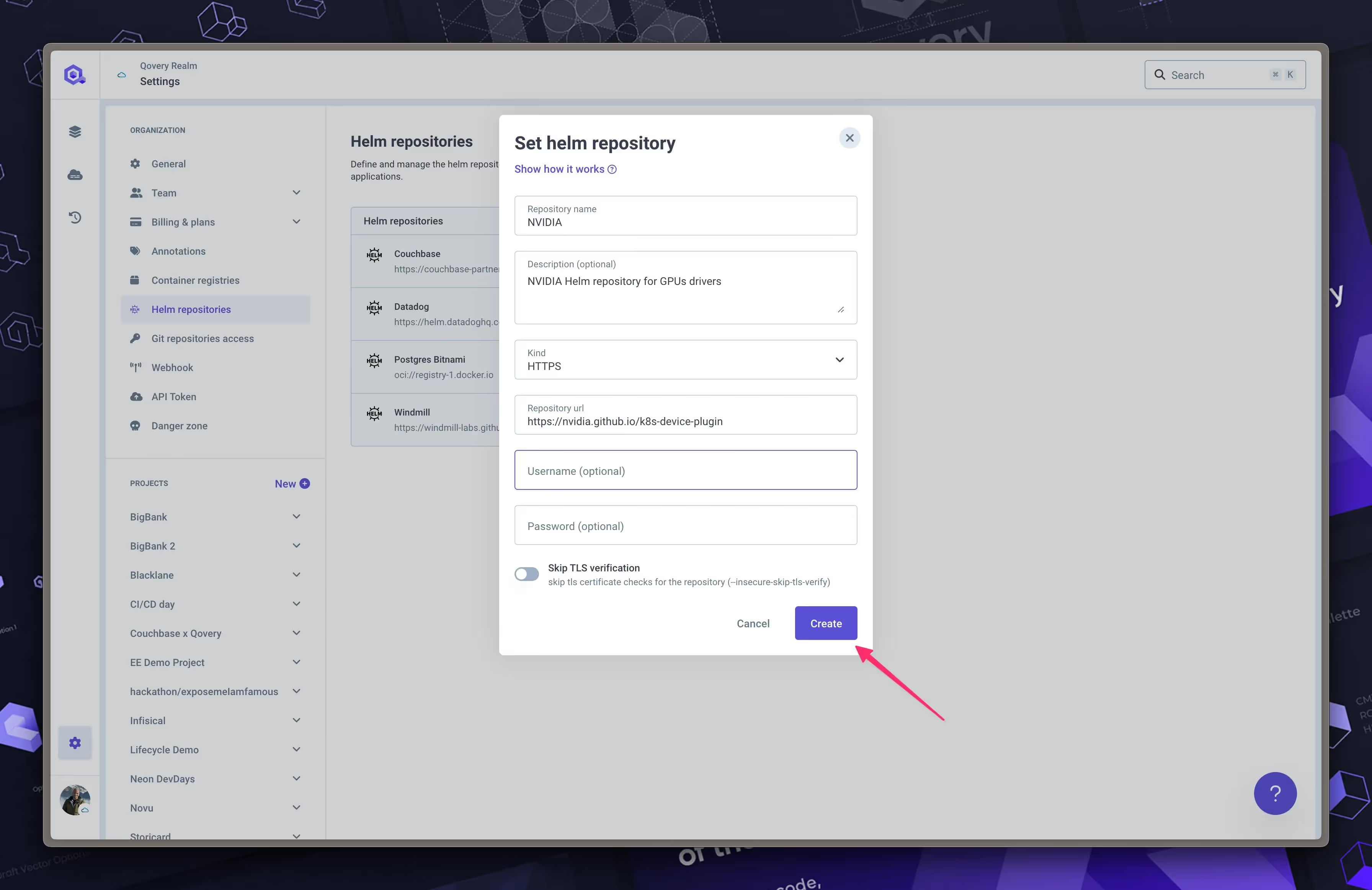

Then register the NVIDIA repository "https://nvidia.github.io/k8s-device-plugin"

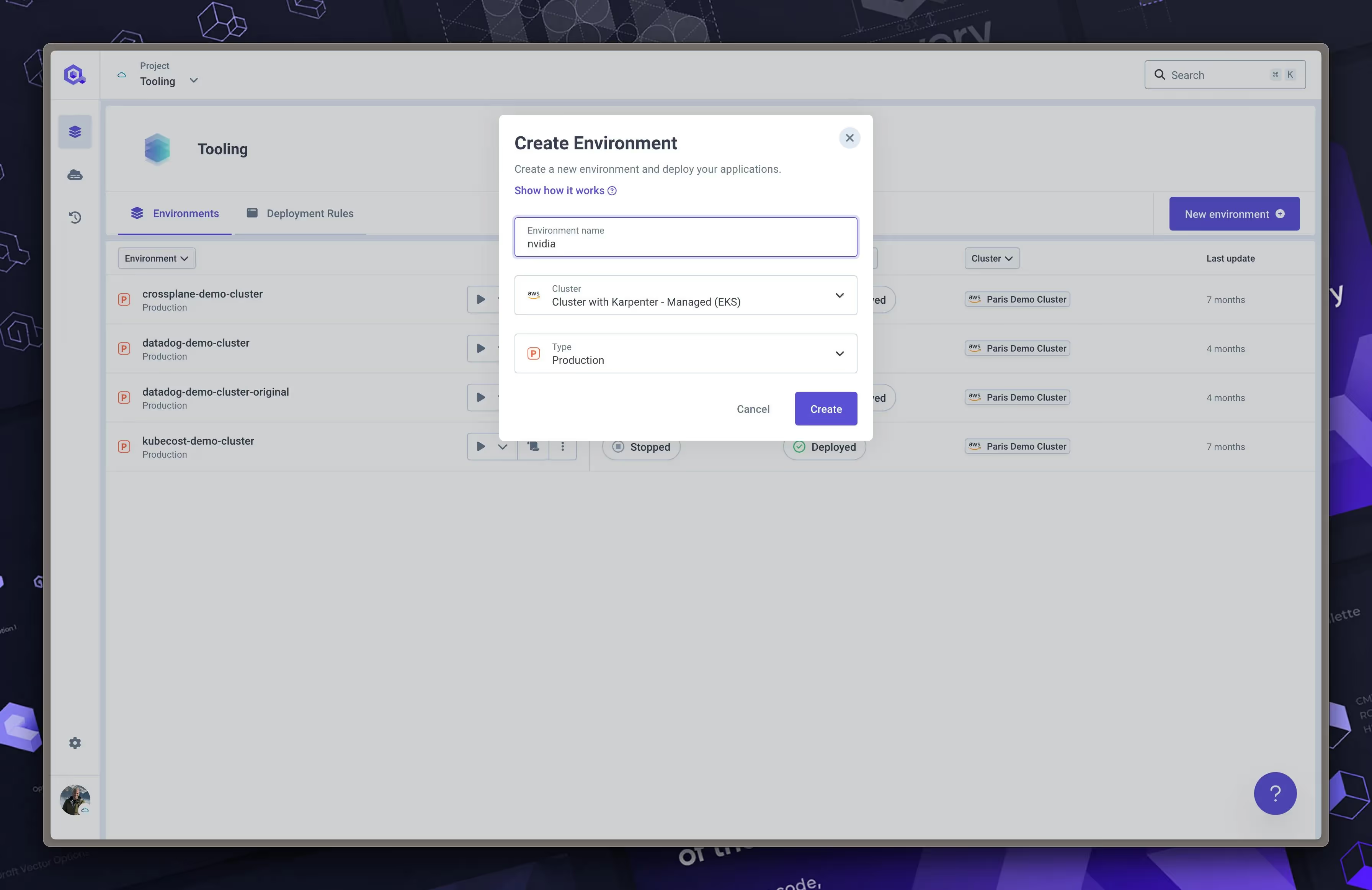

Then, I recommend creating a "Tooling" project with an "NVIDIA" environment. ⚠️ Make sure to select your EKS cluster with Karpenter enabled.

Then you can create a Helm service "nvidia device plugin".

Now, you can deploy the "nvidia device plugin" service to install it on your EKS cluster.
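Once the plugin is deployed, it can be useful to confirm that GPU nodes actually advertise the nvidia.com/gpu resource. The sketch below is one way to check, assuming you have saved or piped the output of `kubectl get nodes -o json`. The `gpu_nodes` helper and the sample data are hypothetical and only illustrate the shape of the check.

```python
GPU_RESOURCE = "nvidia.com/gpu"  # resource name registered by the NVIDIA device plugin


def gpu_nodes(node_list):
    """Return {node name: allocatable GPU count} for nodes that advertise GPUs.

    `node_list` is the parsed JSON from `kubectl get nodes -o json`.
    """
    result = {}
    for node in node_list.get("items", []):
        allocatable = node.get("status", {}).get("allocatable", {})
        count = int(allocatable.get(GPU_RESOURCE, "0"))
        if count > 0:
            result[node["metadata"]["name"]] = count
    return result


# Hypothetical, heavily trimmed sample of `kubectl get nodes -o json` output.
sample = {
    "items": [
        {
            "metadata": {"name": "gpu-node-a"},
            "status": {"allocatable": {"cpu": "4", "nvidia.com/gpu": "1"}},
        },
        {
            "metadata": {"name": "cpu-node-b"},
            "status": {"allocatable": {"cpu": "2"}},
        },
    ]
}

print(gpu_nodes(sample))
```

With Karpenter, GPU nodes are usually created only when a pod requests a GPU, so an empty result before your first GPU workload is scheduled does not necessarily mean the plugin is misconfigured.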

Deploy an App Using a GPU

Deploying an AI application that uses a GPU can be streamlined using Qovery's Helm chart capabilities:

- Prepare your application with a Dockerfile and Helm chart: Make sure your application is containerized and ready for deployment.

- Push your code to a Git repository connected to Qovery.

- Use Qovery to deploy your application: Through the Qovery dashboard, set up your application deployment using the Helm chart. The chart should request the GPU (typically an nvidia.com/gpu resource limit on the container) and target GPU-capable nodes via nodeSelector.
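To make the GPU part of the chart concrete, here is a minimal sketch of the Deployment that such a Helm chart might render, built as a Python dictionary and printed as JSON (which `kubectl apply -f` also accepts). The application name, image, and the `g4dn.xlarge` instance type are assumptions for illustration, and `gpu_deployment` is a hypothetical helper, not part of Qovery or Helm.

```python
import json


def gpu_deployment(name, image, gpu_count=1, instance_type="g4dn.xlarge"):
    """Build a Deployment manifest that requests GPUs and targets a GPU instance type."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Well-known node label; the instance type is an assumption.
                    "nodeSelector": {
                        "node.kubernetes.io/instance-type": instance_type
                    },
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            # GPU request, served by the NVIDIA device plugin.
                            "resources": {"limits": {"nvidia.com/gpu": gpu_count}},
                        }
                    ],
                },
            },
        },
    }


# Hypothetical app name and image, used only for illustration.
manifest = gpu_deployment("my-ai-app", "registry.example.com/my-ai-app:latest")
print(json.dumps(manifest, indent=2))
```

The nodeSelector steers the pod to a GPU instance type, while the nvidia.com/gpu limit is what actually reserves a GPU for the container. In a real chart, both values would normally be exposed through values.yaml so they can be changed per environment.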

Bonus: Using Spot Instances

To further optimize costs, use AWS Spot Instances for your GPU workloads. With Qovery, you can enable Spot Instances in the cluster's advanced settings:

- Navigate to the cluster advanced settings in Qovery.

- Set "aws.karpenter.enable_spot" to "true". Qovery handles the integration seamlessly, providing cost savings while ensuring resource availability for your applications.
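Once Spot is enabled, a workload can optionally state which capacity type it wants through the `karpenter.sh/capacity-type` node label that Karpenter sets on the nodes it provisions. The sketch below adds that selector to a pod spec. The `with_capacity_type` helper and the sample pod spec are hypothetical, and whether pinning a workload this way fits your Qovery-managed cluster is an assumption you should verify.

```python
import copy

CAPACITY_TYPE_LABEL = "karpenter.sh/capacity-type"  # label set by Karpenter on its nodes
VALID_CAPACITY_TYPES = ("spot", "on-demand")


def with_capacity_type(pod_spec, capacity_type="spot"):
    """Return a copy of `pod_spec` whose nodeSelector pins the given capacity type."""
    if capacity_type not in VALID_CAPACITY_TYPES:
        raise ValueError(f"capacity_type must be one of {VALID_CAPACITY_TYPES}")
    updated = copy.deepcopy(pod_spec)  # leave the caller's spec untouched
    updated.setdefault("nodeSelector", {})[CAPACITY_TYPE_LABEL] = capacity_type
    return updated


# Hypothetical pod spec for a GPU workload.
base_spec = {
    "containers": [
        {
            "name": "my-ai-app",
            "image": "registry.example.com/my-ai-app:latest",
            "resources": {"limits": {"nvidia.com/gpu": 1}},
        }
    ]
}

spot_spec = with_capacity_type(base_spec, "spot")
print(spot_spec["nodeSelector"])
```

Spot Instances can be reclaimed by AWS at short notice, so this kind of pinning is best suited to GPU jobs that tolerate interruption, such as batch inference or training that checkpoints its progress.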

Conclusion

By combining AWS EKS with Karpenter and utilizing Qovery for deployment automation, you can streamline the deployment and management of AI applications that require GPU resources. This setup enhances performance and optimizes costs, making it an excellent choice for developers seeking to deploy AI applications at scale efficiently.

Begin deploying your AI apps today with Qovery and unlock the full potential of cloud-native technologies.
